In Eli Review, writers can rate the feedback they received based on how helpful they found it. Helpfulness ratings allow writers to show reviewers the extent to which a comment will help them revise. Writers see reviewers’ comments (with a link to the contextual location in the draft where the reviewer left that comment).

While we acknowledge that not all instructors or courses aim to teach better commenting, this tutorial is for instructors who want to make giving feedback as rigorous as writing drafts. It explains why instructors might encourage students to use the helpfulness ratings, offers strategies for discussing helpfulness ratings, and summarizes the analytics around helpfulness.

Eli Review’s helpfulness rating is the result of research led by Bill Hart-Davidson (2003, 2007, 2010) in the Writing in Digital Environments lab at Michigan State; it’s one of the key features that originally made Eli distinctive and continues to shape its development trajectory. Bill traces his inspiration to Lester Faigley’s 1985 article in Writing in Nonacademic Settings:

A text is written in orientation to previous texts of the same kind and on the same subjects; it inevitably grows out of some concrete situation; and it inevitably provokes some response, even if it is simply discarded (p. 54).

The helpfulness rating captures writers’ responses to each comment reviewers make. Like Amazon’s rating system, helpfulness ratings quantify writers’ satisfaction with reviewers’ feedback. They provide a quantitative snapshot of how helpful reviewers’ comments are. They also tell instructors how satisfied writers are with peer learning.

Eli’s learning analytics surface counts and averages that show the extent to which reviewers’ comments help writers revise. When instructors consistently talk with students about the qualities of helpful comments and use the analytics about the quality (and quantity) of feedback, they can see whether reviewers are giving better (and more) feedback.

Eli Review’s tools for teaching better comments are unique. Most other systems position students as graders, and these systems adjust scores to reduce bias among reviewers. Writers get single scores or scores per category, and reviewers get single scores for the accuracy of their feedback. Such peer grading systems have limited tools for helping reviewers get better at writing helpful comments or for helping writers address disagreement among readers.

Like all reputation systems, helpfulness ratings are most useful when participants share common expectations for high and low scores. Helpfulness ratings are always available to writers, but they are optional (instructors can’t require them).

This section outlines six strategies for engaging students with Eli’s helpfulness ratings in a way that’s productive and useful both for writing coaches and for reviewers:

Writers should determine what is helpful in Eli because they need feedback in order to revise. Writers can think about helpfulness ratings as a way of telling reviewers how much a comment inspired them to make big changes. A helpfulness rating is a low-stakes way for writers to take ownership of asking for what they need from relationships and processes. Instructors can explain that asking for what you need is an important skill in professional and personal settings; by asking for helpful feedback, writers help reviewers improve too.

If students read Feedback and Improvement, they learned about a heuristic: Helpful feedback describes what the reviewer sees in the draft, evaluates that passage in light of the criteria, and suggests a specific improvement. By flipping that pattern, writers can ask:

- “Does this comment help me understand my writing any better?”

- “Does this comment help me to measure my progress toward the assignment goals?”

- “Does this comment offer any specific advice that I can follow when I revise?”

With their answers in mind, writers can rate the comment on a five-star scale. This resource and video cover this helpfulness scale.

Give one star for each part of the comment:

- Description–Does the comment paraphrase main ideas or goals?

- Evaluation–Does the comment apply specific criteria and explain?

- Suggestion–Does the comment offer a next step?

- Kindness–Does the comment respect the draft and writer?

- Transformation–Does the comment inspire big, bold revisions?
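The one-star-per-part scale above can be sketched as a simple tally. This is a minimal illustration in Python, not part of Eli Review itself: the `CommentChecklist` class and `star_rating` function are hypothetical names, and the writer still decides each yes/no answer by reading the comment.

```python
from dataclasses import dataclass, fields


@dataclass
class CommentChecklist:
    """A writer's yes/no judgment for each part of a comment (hypothetical structure)."""
    description: bool = False     # paraphrases main ideas or goals
    evaluation: bool = False      # applies specific criteria and explains
    suggestion: bool = False      # offers a next step
    kindness: bool = False        # respects the draft and writer
    transformation: bool = False  # inspires big, bold revisions


def star_rating(checklist: CommentChecklist) -> int:
    """Return one star for each part the writer found in the comment."""
    return sum(bool(getattr(checklist, f.name)) for f in fields(checklist))


# A comment that describes, evaluates, and suggests kindly, but does not
# prompt a major revision, earns four stars under this tally.
example = CommentChecklist(description=True, evaluation=True, suggestion=True, kindness=True)
print(star_rating(example))  # 4
```

Under this tally, a fifth star is reserved for comments that also inspire big, bold revisions, which is one way to keep five-star ratings rare.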

These questions and ratings are also useful when discussing exemplary or unhelpful comments with the class. By re-using the describe-evaluate-suggest heuristic and by talking about the helpfulness rating scale explicitly in class, instructors reinforce the kinds of thinking that lead to better revisions.

Grading reviews based on helpfulness ratings will likely result in scores skewed toward 5 because students often want to protect each other’s grades. Worried about grades, they won’t ask for the help they need to revise as writers.

We discourage using average helpfulness ratings to grade reviewers’ contributions to peer learning. In the grading tutorial, we describe ways instructors might grade single reviews and all reviews.

We find that making an analogy to Amazon works: If everyone gives every product 5 stars on Amazon, the reviews are not helpful in telling us if the product is valuable. Most comments should get 3 stars, not because they are bad, but because that’s average. Making the analogy to a bell curve can also help students visualize that most comments should fall in the middle; writers should reserve 5 and 1 for the extremes.
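To see whether a class’s ratings resemble that bell shape, an instructor could tally how often each star value appears. The following is a minimal sketch, assuming the ratings have been copied by hand into a plain list of integers; it does not rely on any Eli Review export format, and the function name and sample data are hypothetical.

```python
from collections import Counter
from statistics import mean


def rating_distribution(ratings: list[int]) -> dict[int, float]:
    """Return the share of ratings at each star value from 1 to 5."""
    total = len(ratings)
    if total == 0:
        # No ratings yet: report a zero share for every star value.
        return {stars: 0.0 for stars in range(1, 6)}
    counts = Counter(ratings)
    return {stars: counts[stars] / total for stars in range(1, 6)}


# Hypothetical ratings from one review; most cluster around 3 stars.
ratings = [3, 3, 4, 2, 3, 5, 3, 4, 2, 3]
shares = rating_distribution(ratings)
for stars, share in shares.items():
    print(f"{stars} stars: {share:.0%}")
print(f"average: {mean(ratings):.1f}")
```

If most of the share sits at 4 and 5 stars rather than in the middle, that may be a sign that students are protecting each other rather than signaling which comments helped.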

Students won’t believe you. They won’t believe that it is possible to get an A if they only earn 3 stars as a reviewer, so they will give 4-5 stars in hopes that peers will be as generous to them. Emphasize 3 stars as the average you expect.

We find that two activities focus students’ attention on helpfulness ratings:

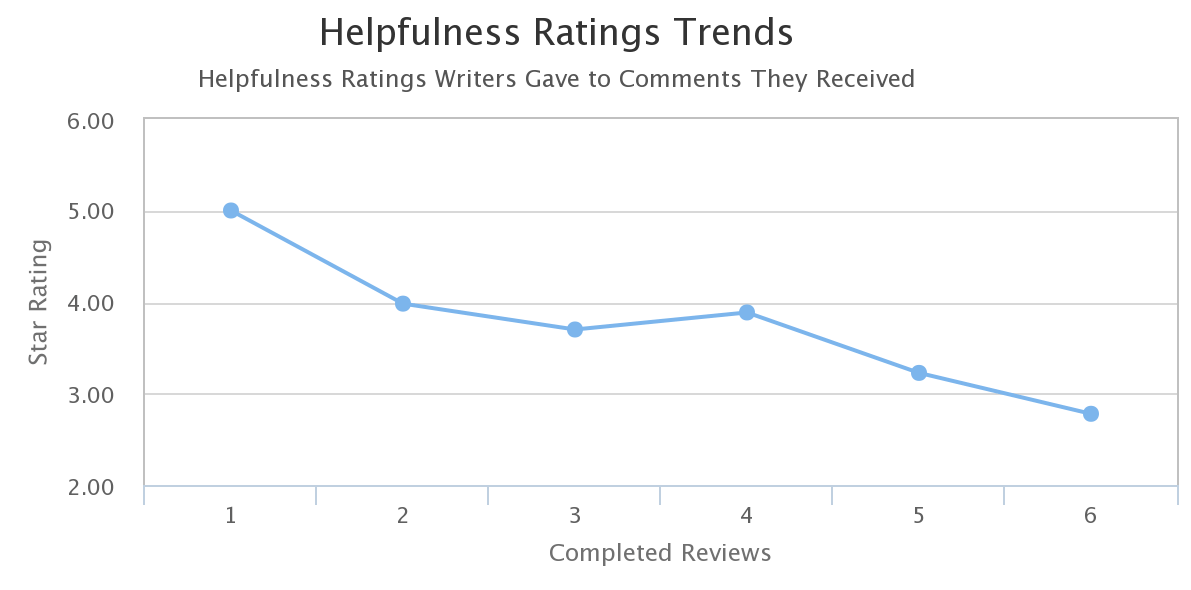

Eli Review analytics around helpfulness ratings provide formative feedback for instructors about how helpful reviewers’ comments are for writers’ revisions. While we discourage grades derived from single helpfulness ratings, aggregate helpfulness ratings can help instructors and students assess growth as reviewers so long as those numbers are contextualized by the other qualities of helpful feedback.

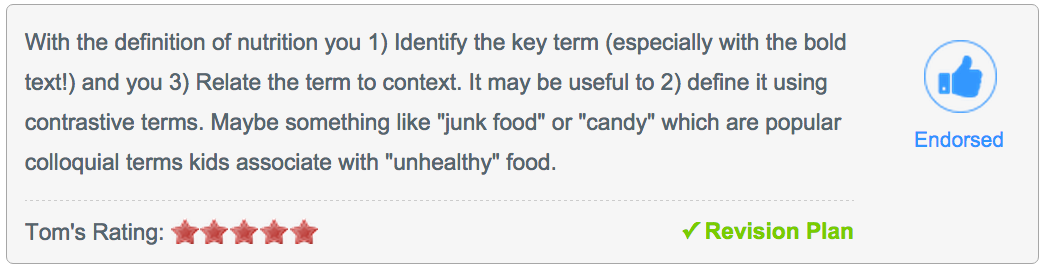

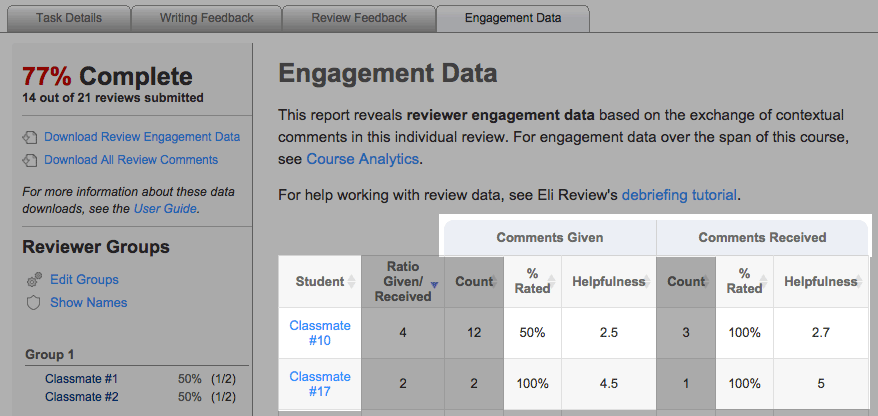

The Engagement Data report from any review will show instructors how writers rated the comments they received:

Instructors can also explore the feedback writers received in more depth by clicking on a student’s name, which will open that student’s review report. If writers rated all comments with 1-3 stars or added few comments to revision plans, instructors can ask:

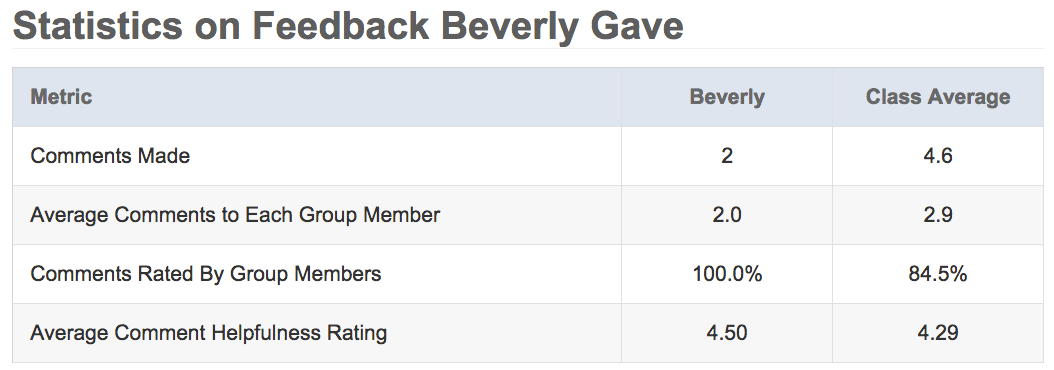

The Engagement Data report pictured above will also reveal data about the comments a student gave, but instructors can also see an aggregate view of the comments exchanged between reviewers and writers by clicking that student’s name:

The review feedback tab in the review report shows total and average counts of comments, which are helpful for knowing if students met the minimum number of required comments and for knowing the extent to which writers have used helpfulness ratings.

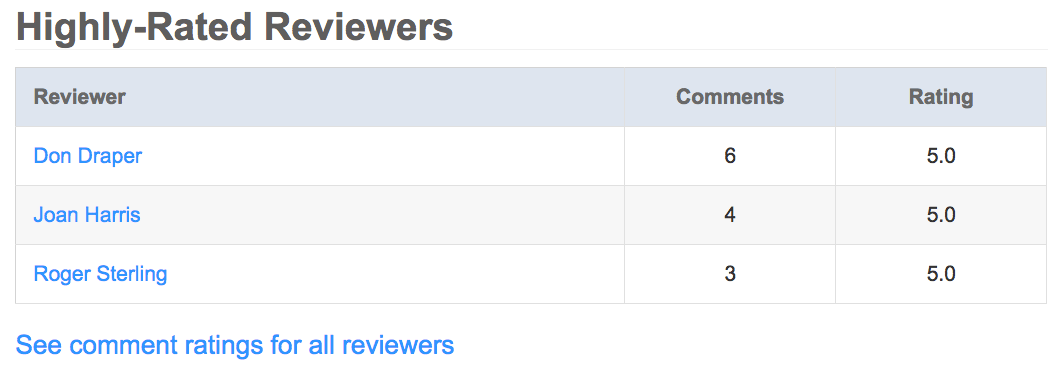

These reviewer analytics also indicate which reviewers and which comments were the highest rated. These exemplary students and comments can be discussed as models, perhaps using the flipped describe-evaluate-suggest pattern discussed above.

The most complete analytics on reviewers’ work are available by clicking “see comment ratings for all reviewers.” Instructors can see their class ranked highest-to-lowest by helpfulness rating. This view lets instructors quickly tell who may need additional coaching on giving helpful feedback.
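The highest-to-lowest ranking described above amounts to averaging the stars each reviewer received and sorting the results. The sketch below illustrates that arithmetic only; it is not Eli Review’s implementation, and the function name, data shape, and student names are assumptions made for the example.

```python
from collections import defaultdict
from statistics import mean


def rank_reviewers(rated_comments: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """Rank reviewers from highest to lowest average helpfulness rating.

    Each item pairs a reviewer's name with the stars a writer gave one comment.
    """
    by_reviewer: dict[str, list[int]] = defaultdict(list)
    for reviewer, stars in rated_comments:
        by_reviewer[reviewer].append(stars)
    averages = [(reviewer, mean(stars)) for reviewer, stars in by_reviewer.items()]
    return sorted(averages, key=lambda pair: pair[1], reverse=True)


# Hypothetical data: (reviewer, stars received on one comment).
rated = [("Ana", 4), ("Ben", 2), ("Ana", 5), ("Cy", 3), ("Ben", 3), ("Cy", 3)]
ranking = rank_reviewers(rated)
for reviewer, average in ranking:
    print(f"{reviewer}: {average:.1f}")
```

Reviewers near the bottom of such a list are candidates for coaching, though an average built from only one or two rated comments says little on its own.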

Clicking on a student’s name allows the instructor to see the individual student’s full report: the totals, averages, and actual comments (rated/unrated, added to revision plan/unadded, endorsed/not endorsed). This digest of comments given can help the instructor better coach the reviewer. As you assess the review feedback, keep in mind that sometimes a reviewer’s helpfulness score reflects the writer’s skew (i.e., too generous with the stars; too stingy with the stars; not engaged in rating comments).
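One rough way to spot the writer skew mentioned above is to compare each writer’s average rating given with the class-wide average. The sketch below assumes the ratings each writer gave have been gathered by hand into a dictionary; the function name, data shape, and student names are hypothetical, and Eli Review does not necessarily report this figure.

```python
from statistics import mean


def writer_skew(ratings_by_writer: dict[str, list[int]]) -> dict[str, float]:
    """Return each writer's average rating given minus the class-wide average.

    Positive values suggest a generous rater and negative values a stingy one.
    Writers who rated no comments are left out of the result.
    """
    all_ratings = [stars for given in ratings_by_writer.values() for stars in given]
    if not all_ratings:
        return {}
    class_average = mean(all_ratings)
    return {
        writer: mean(given) - class_average
        for writer, given in ratings_by_writer.items()
        if given
    }


# Hypothetical data: the stars each writer gave to comments they received.
given = {"Dee": [5, 5, 5], "Ed": [2, 3, 1], "Flo": []}
skew = writer_skew(given)
unengaged = [writer for writer, stars in given.items() if not stars]
print(skew)       # Dee rates well above the class average, Ed well below
print(unengaged)  # writers who did not rate any comments
```

A reviewer whose comments were rated mostly by a stingy or unengaged writer may deserve a closer look at the comments themselves before any conclusion is drawn from the score.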

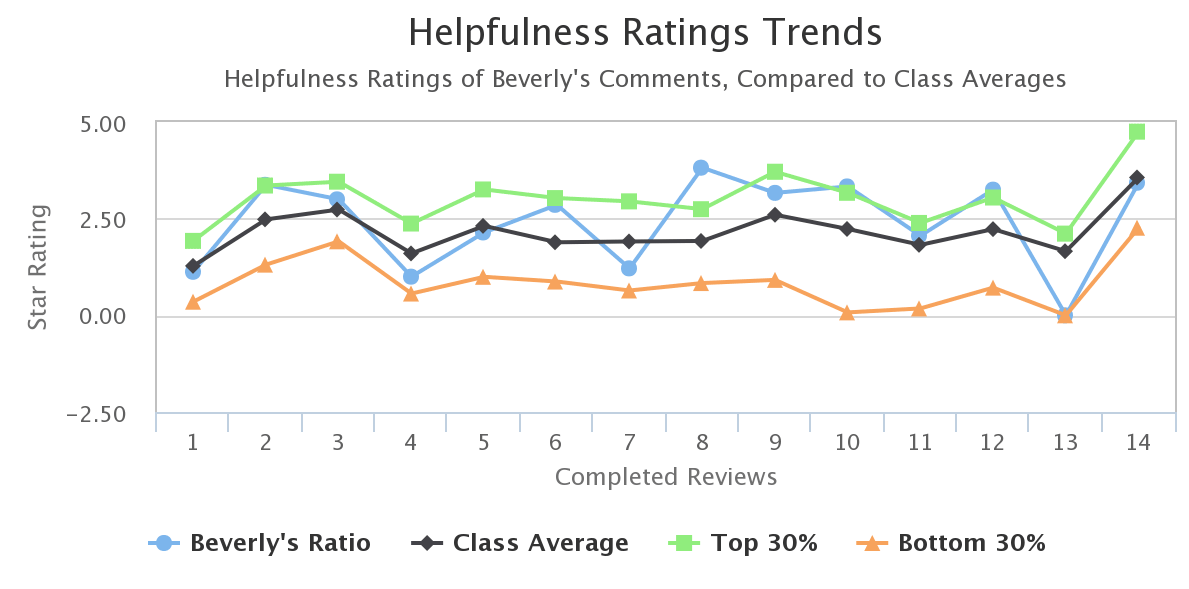

Eli also produces analytics that can help instructors assess students’ work as reviewers across multiple reviews.

From the course-level analytics report, there are two primary ways to check helpfulness ratings:

You can access the analytics report for individual students from the course roster or by clicking on any student’s name in Course Analytics reports.

The student analytics reveal the following:

For more on working with the analytics produced during review activities, see our development module on evidence-based teaching.

Faigley, Lester. “Nonacademic writing: The social perspective.” Writing in nonacademic settings (1985): 231-248.

Hart-Davidson, William. “Seeing the project: mapping patterns of intra-team communication events.” Proceedings of the 21st annual international conference on Documentation. ACM, 2003.

Hart-Davidson, William, Clay Spinuzzi, and Mark Zachry. “Capturing & visualizing knowledge work: results & implications of a pilot study of proposal writing activity.” Proceedings of the 25th annual ACM international conference on Design of communication. ACM, 2007.

Hart-Davidson, William, M. McLeod, C. Klerkx, and M. Wojcik. “A method for measuring helpfulness in online peer review.” Proceedings of the 28th ACM International Conference on Design of Communication. ACM, 2010, pp. 115-121.

This tutorial covers how helpfulness ratings encourage better feedback. Related resources include: