- What is endosymbiotic theory?

- How does the flippase enzyme affect molecules in the cell membrane?

- How do you distinguish between saturated and unsaturated chains?

- How will two amino acids interact in a folded protein?

- Where do cell membranes come from?

Students in Biology 350 Cell Biology at San Francisco State University wholeheartedly agreed that these muddiest points could only be addressed by Prof. Jonathan Knight.

The muddiest point writing activity developed by Mosteller has been a feature of this large lecture course for several years. At the end of class, students would write down their questions about the day’s materials on an index card and turn them in as they left the room. Knight then read and sorted the muddy cards, identifying patterns that he could address during the next class session.

Now that the lecture meets on Zoom, Knight has had to find a new index card.

How does muddiest point writing work in Eli Review?

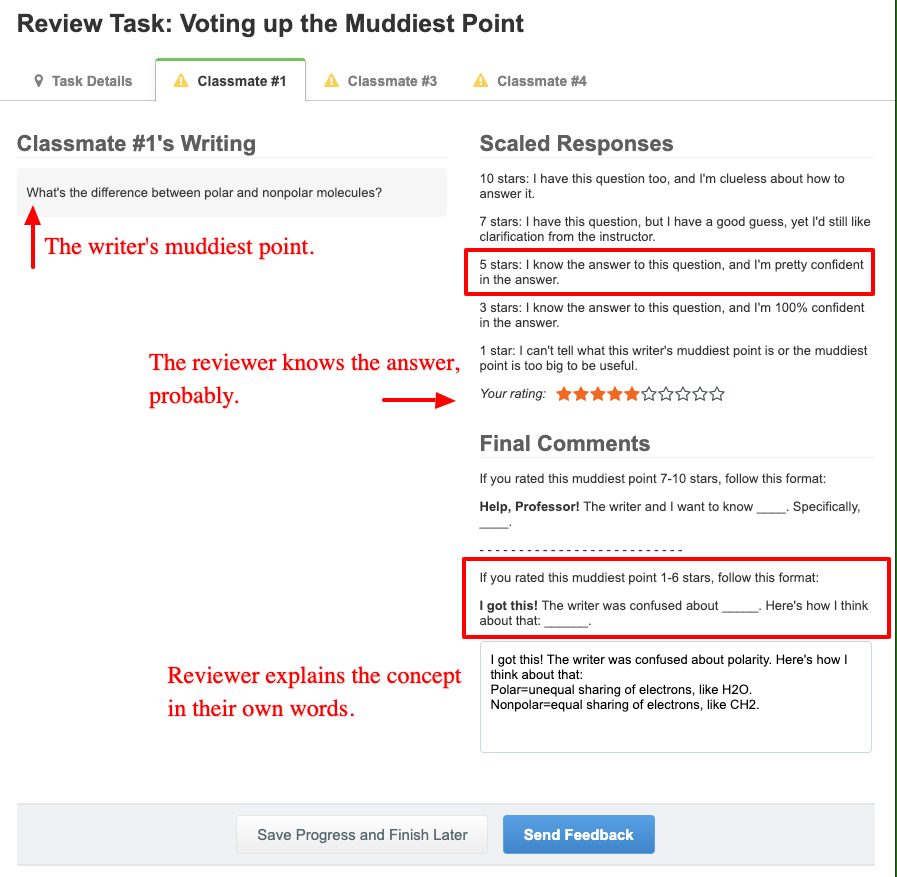

Asking students to submit their muddiest point in Eli Review also allows them to crowdsource their answers. Students nominate muddiest points for Knight to answer or they answer the question themselves. Here’s a student’s view of a peer’s muddiest point and the feedback they gave:

In peer review groups, students see what topics confuse 3-4 other students, and they have the chance to explain what they know or to echo their peers’ confusion.

Adding crowdsourcing gives students an important opportunity to put the science in their own words in a low-stakes assignment. It also gives them a chance to realize that they are not alone in their confusion. Most of all, giving feedback helps students understand that they have something to offer others.

How’s it Going?

After three crowdsourcing activities in this 200-student course, Knight has several observations about how well the activity is going.

Some but not enough students are effectively explaining concepts.

By reading through reviewers’ feedback to writers, Knight can tell that about sixty students are providing effective explanations each time.

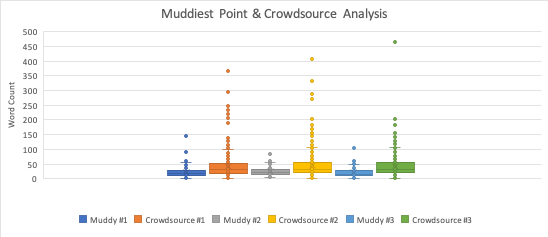

In each crowdsourcing activity, most students are writing 30-50 words, or about two sentences. The top contributors are writing about 100 words, or four sentences, of explanation.

Too many students are avoiding the explanations.

Reviewers rate muddiest points 7-10 stars if they’d like the professor to answer, 3-6 stars if they have a good idea about how to answer, and 1-2 stars if the muddiest point is too confusing or not relevant.

Crowdsourcing is splitting the muddiest points in half:

- 48% are rated as needing the professor’s clarification.

- 51% are rated as topics reviewers feel like they can address.
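For readers who want to tally a similar split from their own class, here is a minimal Python sketch of the star-rating arithmetic described above. The function names (`classify`, `split`) and the sample ratings are hypothetical and made up for illustration; this is not how Eli Review computes or exports its reports, only one way to bin ratings by the three star ranges.

```python
from collections import Counter

CATEGORIES = ("professor", "peer", "unclear")


def classify(stars: int) -> str:
    """Map a 1-10 star rating to one of the three categories (hypothetical labels)."""
    if not 1 <= stars <= 10:
        raise ValueError(f"rating must be between 1 and 10, got {stars}")
    if stars >= 7:
        return "professor"  # 7-10: reviewer wants the professor to answer
    if stars >= 3:
        return "peer"       # 3-6: reviewer has a good idea how to answer
    return "unclear"        # 1-2: too confusing or not relevant


def split(ratings):
    """Return the rounded percentage of ratings that fall in each category."""
    counts = Counter(classify(s) for s in ratings)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no ratings to tally")
    return {cat: round(100 * counts[cat] / total) for cat in CATEGORIES}


# Made-up ratings, for illustration only (not data from the course).
sample = [8, 9, 4, 5, 7, 3, 10, 6, 2, 5]
print(split(sample))
```

Comparing the "professor" and "peer" percentages across activities is one way to see whether the split is moving toward a target such as 30/70.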

After reading through the muddiest points, Knight feels confident that students should be able to answer more of the questions on their own. A more desirable split would be 30% need a professor’s explanation and 70% could be answered by another student.

Or maybe a 50/50 split is really desirable, and the activity is working as well as it can. In this pilot of crowdsourcing muddiest points, Knight is testing his own assumptions about effective outcomes.

After the third activity, Knight spent class time talking with students about why crowdsourcing answers can benefit them. Going forward, putting more emphasis on how students can use the activity to practice concepts and ideas may help more students take on the muddiest points themselves.

More students than usual are writing that they have no muddiest points.

10-15% of muddiest points say: “I don’t have a muddiest point today.” That’s far more than was usual when the activity happened on index cards.

Perhaps the immediacy of the notecard is lost when students have to type their muddiest point.

Maybe the loss of privacy in a crowdsourced peer learning activity reduces students’ willingness to write down their confusion. Even though the activity is anonymous, maybe some students are too uncomfortable sharing their muddiest points with peers.

Or maybe this trend represents a loss of intimacy with the professor. An anonymous index card read only by your professor offers an instructor-student connection. The crowdsourcing activity interrupts that connection. Even though the professor has full access to all the drafts and all the crowdsourced answers, maybe students think they have less chance of being read by the professor.

Or, it could be that the percentage of students without muddiest points online is the same as with the index cards. Students might not have bothered to turn in an index card without a question, but in the online environment their participation is tracked. They are forced to submit something to earn “complete” for the task.

What next?

Finding a new muddiest points index card for online classes is helping Knight engage students in different kinds of student-student and student-instructor interactions. In a recent Inside Higher Ed article, Alexander Astin observed:

According to the research, the greatest challenge for online educators concerns two activities that have the most potential for enhancing student involvement and learning: contact with faculty and contact with fellow students. In other words, the most impactful undergraduate experiences appear to be effective because they increase student-faculty contact, student-student contact or both. With distance education, of course, the student typically has little opportunity to experience personal contact with either faculty members or fellow students, so the challenge for the online instructor who seeks to maximize student involvement is formidable.

Knight’s approach is not formidable at all. In fact, it’s so easy that the activity is assigned most weeks, and it is available to all Eli Review users from our library of tasks. And there’s room in this simple activity and the follow-up conversations to put more emphasis on instructor-student relationships and the importance of peer-to-peer learning.

So far, results from muddiest points crowdsourcing show consistent engagement across tasks. Participation rates and word counts are steady and consistent with engagement reported in other research (Cavalli, 2018; Keeler et al., 2015; Simpson-Beck, 2011).

For Knight, the information about students’ muddiest points is useful. Although it’s not the same as grouping index cards by theme, Knight uses Eli’s Tallied Response feature to read through students’ nominations and identify a few for the next class. The real benefit is skimming students’ explanations in the comment digest. It’s powerful to be able to see students telling each other how they think through a process or remember a concept.

The next step is to keep experimenting with ways to encourage students to engage with the content and each other through this low-stakes peer feedback activity. The class will try something new this week. Instead of writing about their confusion, students will answer: “What is the main take-away from today’s class?” Peer review then asks about agreement/disagreement and what they like or might revise about each other’s observations. Knight will be able to talk back to the class about what they understood and didn’t.

A new muddiest point index card creates even more feedback loops between students and their professors, and that’s a recipe for better learning.

References

Astin, Alexander. 2020. “Meeting the Instructional Challenge of Distance Education.” Inside Higher Ed, September 30, 2020. https://www.insidehighered.com/advice/2020/09/30/pedagogical-practices-might-enhance-student-involvement-online-learning-opinion.

Mosteller, Frederick. 1989. “The ‘Muddiest Point in the Lecture’ as a Feedback Device.” On Teaching and Learning: The Journal of the Harvard-Danforth Center (April): 10–21.

Research on Muddiest Points in Large Lectures

Cavalli, Matthew. 2018. “Comparing Muddiest Points and Learning Outcomes for Campus and Distance Students in a Composite Materials Course.” In ASEE Annual Conference and Exposition. Salt Lake City, Utah.

Keeler, Jessie, Bill Jay Brooks, Debra May Gilbuena, Jeffrey Nason, and Milo Koretsky. 2015. “What’s Muddy vs. What’s Surprising? Comparing Student Reflections about Class.” Seattle, WA: American Society for Engineering Education. https://stem.oregonstate.edu/files/stemfiles/ASEE_2015_Muddiest_Surprized_Reflection_Paper-1.pdf.

Simpson-Beck, Victoria. 2011. “Assessing Classroom Assessment Techniques.” Active Learning in Higher Education 12 (2): 125–32. https://doi.org/10.1177/1469787411402482.

Resources for Using Muddiest Points in Your Class

- Use Muddiest Points in Canvas

- Use Muddiest Points in Google, NearPod, or Socrative

- Use the Muddiest Points tasks in Eli Review