This post describes the methodology I’m using to understand student success in my first-year writing course at a community college. To help this group of learners, I need to find out who is struggling and what kind of help they need as quickly as I can: before I see the first paper draft, and before they fall into a pattern that will keep them from improving.

I offer a series of quick-enough analyses that instructors can use to reflect on student growth in their own classes:

- Where do learners start?

- What’s the frequency of practice?

- What’s the intensity of practice?

- What’s the outcome of practice?

- Who leveled up?

- Why did highly engaged reviewers improve?

Student success in a writing course depends on revision. Revision requires understanding the criteria and how to meet them. Setting revision goals helps students consciously articulate the criteria and their options for improvement.

As a writing instructor, my goal is for students to practice setting revision goals as often as possible. In peer review, students set revision goals for other writers by giving feedback. They set revision goals for themselves by using feedback to revise.

A peer review activity offers two indicators about whether students are on track to revise: feedback students give (reviewer labor) and feedback they use (writer labor). This methodology focuses on the frequency and intensity of reviewer labor as the primary indicator of revision potential and student success. Of the two indicators, reviewer labor is the easier to see early.

The comments students write during a peer review show me how they are processing criteria. With enough of the right kind of words in a peer review comment, I can tell if they are on track or need a bit of extra help. This analysis focuses on enough words. With enough words, the right words are more likely.

Where do learners start?

To help them level up, teachers have to know where students start. Instead of using SAT scores, high school GPA, or other standardized measures, I used a diagnostic response paper in which students read a passage, picked a suggested thesis, and wrote a paragraph supporting their selected thesis. The diagnostic highlights how well students can use quotes and paraphrases to make a claim. That skill is assessed in all five required papers, so isolating it upfront is useful for predicting student success in the course.

Based on that writing sample, I sorted two sections of first-year composition into three groups:

- 18 struggling writers

- 18 solid writers

- 12 strong writers

In the analyses that follow, students who did not submit at least two major papers have been excluded.
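The exclusion rule is simple enough to apply mechanically before any other analysis. A minimal sketch, assuming each student record carries a hypothetical `papers_submitted` count; the field names and sample roster are illustrative, not drawn from the course data.

```python
from dataclasses import dataclass


@dataclass
class Student:
    name: str
    diagnostic_group: str   # "struggling", "solid", or "strong"
    papers_submitted: int   # hypothetical field: major papers turned in


MIN_PAPERS = 2  # students with fewer than two major papers are excluded


def included(students: list[Student]) -> list[Student]:
    """Keep only students who submitted at least MIN_PAPERS major papers."""
    return [s for s in students if s.papers_submitted >= MIN_PAPERS]


# Hypothetical roster for illustration only.
roster = [
    Student("A", "struggling", 4),
    Student("B", "solid", 1),
    Student("C", "strong", 2),
]
print([s.name for s in included(roster)])  # ['A', 'C']
```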

What’s the frequency of practice?

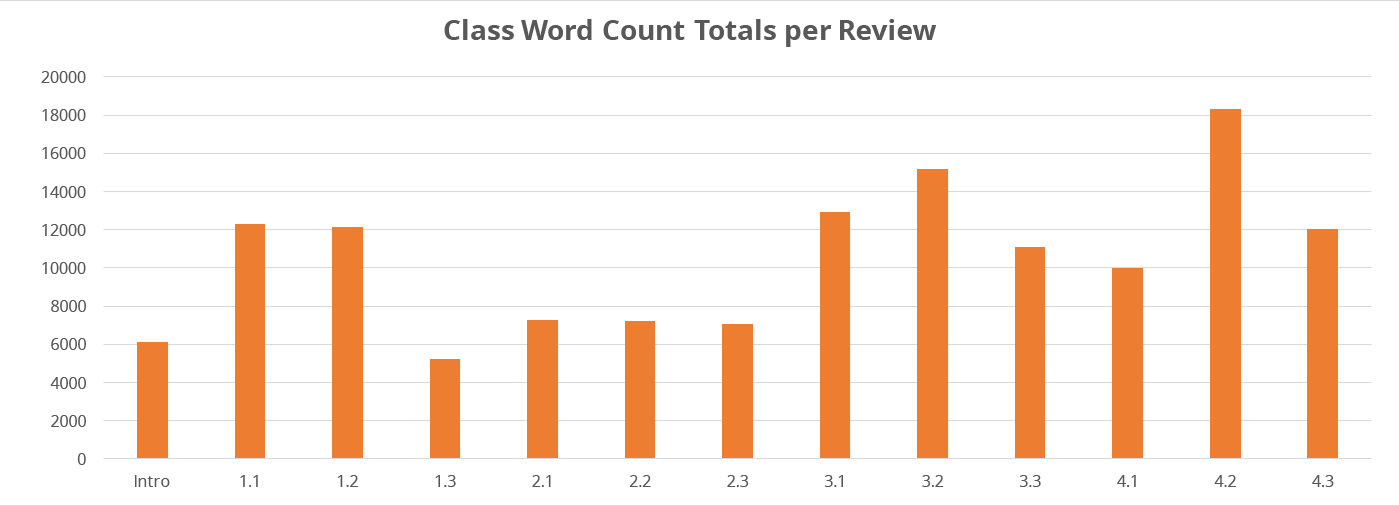

My class follows a near-weekly write-review-revise schedule. In seventeen weeks, students completed 13 peer learning activities: one introductory review followed by three reviews for each of the four major papers.
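The activity count works out as 1 + 4 × 3 = 13. A short sketch that builds that schedule; the activity labels are made up for illustration.

```python
# One introductory review, then three reviews for each of four major papers.
MAJOR_PAPERS = 4
REVIEWS_PER_PAPER = 3

schedule = ["Intro review"] + [
    f"Paper {p}, review {r}"
    for p in range(1, MAJOR_PAPERS + 1)
    for r in range(1, REVIEWS_PER_PAPER + 1)
]
print(len(schedule))  # 13
```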

My instruction focuses on giver’s gain, Bill Hart-Davidson’s catchphrase for describing the benefits of being a helpful reviewer. When reviewers detect problems and offer suggestions, they practice writing skills that set them up to carefully revise their own work. Comments are revision fuel for the reviewers who give them as well as for the writers who receive them.

What’s the intensity of practice?

Reviewers who are giving other writers a lot of feedback are working hard at producing revision fuel. Reviewers’ intensity is first visible in comment quantity and length. Comments that convince writers to revise describe the draft, evaluate it against criteria, and suggest revisions. These comments are also not too short.

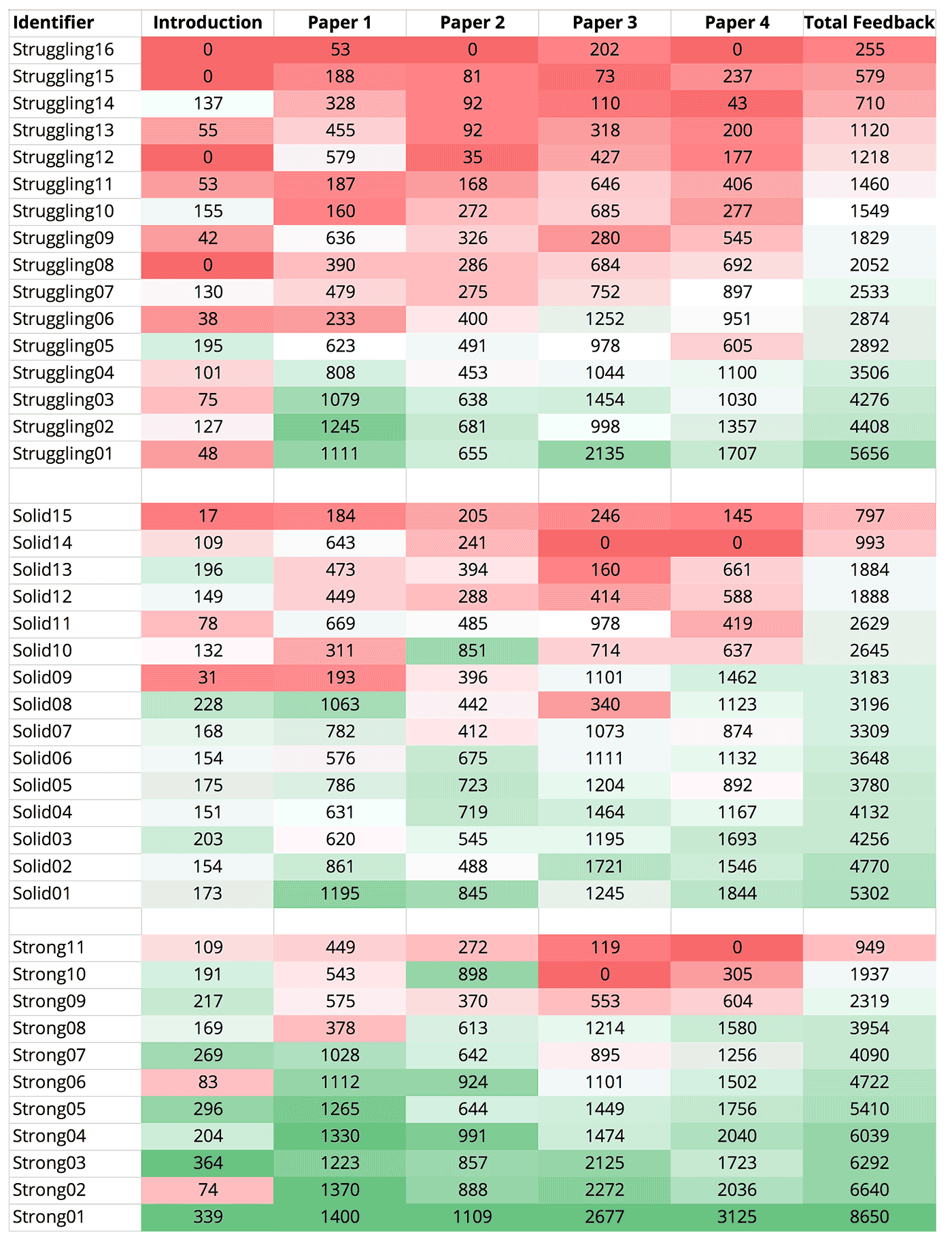

Word count works as a floor measure: few words, little revision fuel. This heat map shows the word count of feedback given for the introductory review and for the combined three reviews related to each major paper.

The red cells at the top of the chart mark students who are not practicing very hard. These reviewers are off-pace with the rest of the class in terms of how much revision fuel they are giving other writers. The green bands near the bottom of the chart show students who are writing a lot and likely pushing their limits enough to be learning.

For example, in Paper 1, most students in the red zone for word count wrote about 200 words of feedback across three reviews. During the same time, most students in the green zone wrote 1,200 words. On average, green zone reviewers write about 100 more words per review than red zone reviewers. These are not small differences.

Students with green word counts are more likely than those with red word counts to be working with more criteria and making enough suggestions to other writers that it counts as effective practice. Students with lots of red word counts might have done some work, but they are unlikely to have gotten much practice with setting revision goals from it. They are probably rehearsing what they always say about writing rather than practicing to say something more and different about writing. We can’t expect much improvement with this low level of engagement.
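The totals behind a word-count heat map like this one can be tallied with a few lines of code. A minimal sketch, assuming a hypothetical log of `(student, activity, comment_text)` rows where each activity is labeled "Intro" or "Paper N, review R"; the log format, labels, and sample comments are illustrative, and words are counted by splitting on whitespace.

```python
from collections import defaultdict

# Hypothetical log rows: (student, activity, comment_text).
comments = [
    ("A", "Intro", "Your thesis is clear but the quote needs a lead-in."),
    ("A", "Paper 1, review 1", "Add a sentence explaining how this quote supports the claim."),
    ("A", "Paper 1, review 2", "Good paraphrase."),
    ("B", "Paper 1, review 1", "Looks good."),
]


def column_for(activity: str) -> str:
    """Map an activity to its heat-map column: 'Intro' or the paper it belongs to."""
    return activity.split(",")[0]  # "Paper 1, review 2" -> "Paper 1"


def word_count_grid(rows):
    """Total words of feedback per student per column (whitespace-separated words)."""
    grid = defaultdict(lambda: defaultdict(int))
    for student, activity, text in rows:
        grid[student][column_for(activity)] += len(text.split())
    return grid


grid = word_count_grid(comments)
print(dict(grid["A"]))  # {'Intro': 10, 'Paper 1': 12}
```

Summing the three reviews for each paper into one column mirrors how the heat map combines them; coloring cells red or green is then a matter of comparing each total with the class.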

What’s the outcome of practice?

Students’ grades on each major paper aren’t ideal indicators of how much students learned about writing, especially when each paper gets harder. Yet, grades are the indicators that determine whether students move on to the next writing course or not.

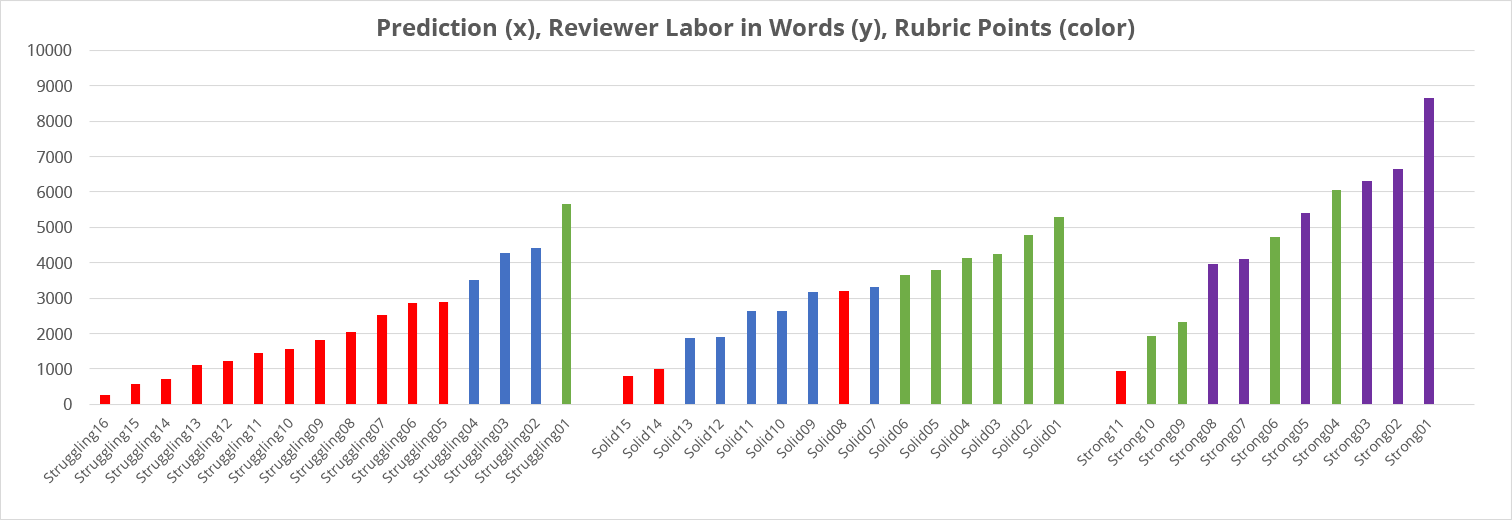

This graph shows students according to my prediction, their effort in giving feedback (in words), and their grades on major papers (A=purple, B=green, C=blue, and D/F=red).

The vertical bars in this chart show peer norms: among writers with similar skills according to the diagnostic, who outpaced the group, who kept pace, and who fell off-pace in giving feedback. The colors in this chart also show how writers’ finished drafts ranked.

Who leveled up? Struggling writers 1-4 and solid writers 1-6 earned higher-than-predicted grades. Compared to their peers, these students wrote more words in feedback.

Who underperformed? Some students fell short of peers who scored similarly on the diagnostic: strong writer 11 and solid writers 8, 14, and 15. Too little reviewer labor kept these writers from keeping up with their near-peers on final draft grades.
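Sorting students into "leveled up" and "underperformed" amounts to comparing each earned grade with a predicted one. A minimal sketch, assuming a predicted grade per student is available; the post does not state how diagnostic groups map to predicted grades, so the function takes the prediction as an input, and the 1-4 grade coding follows the grade table in this post.

```python
# Same 1-4 coding as the grade table: D/F=1, C=2, B=3, A=4.
GRADE_POINTS = {"D/F": 1, "C": 2, "B": 3, "A": 4}


def compare_to_prediction(predicted: str, earned: str) -> str:
    """Label a student by comparing the earned grade to the predicted grade."""
    diff = GRADE_POINTS[earned] - GRADE_POINTS[predicted]
    if diff > 0:
        return "leveled up"
    if diff < 0:
        return "underperformed"
    return "as predicted"


# Hypothetical example: a student predicted to earn a C who earns a B.
print(compare_to_prediction("C", "B"))  # leveled up
```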

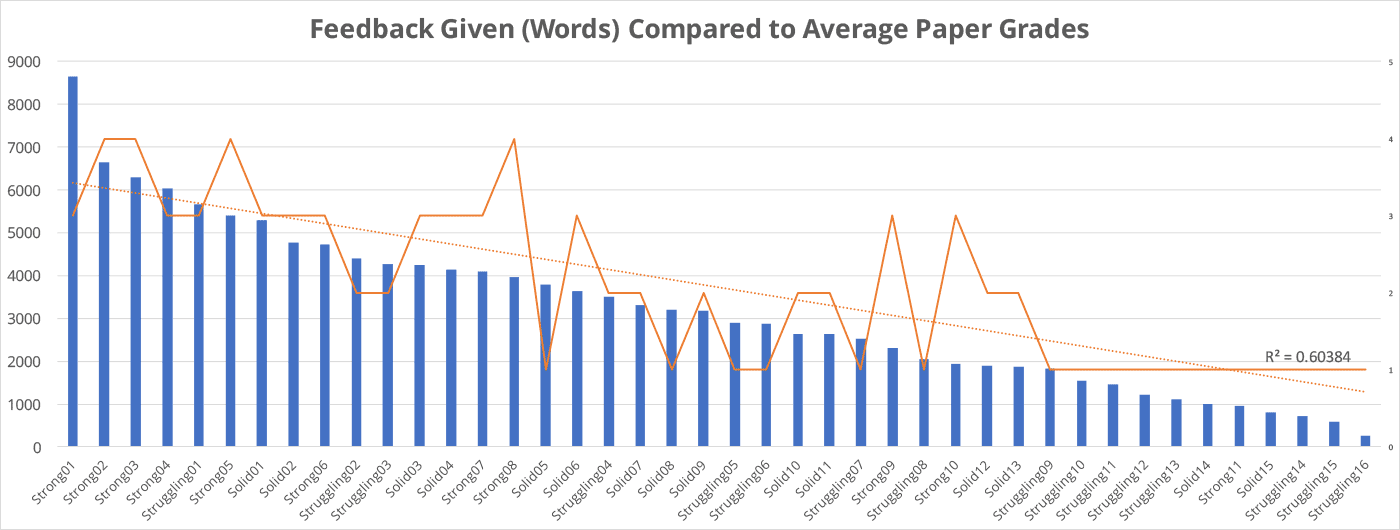

The effect of effort in giving feedback is more pronounced when looking at the average feedback given by students based on their major paper grades.

| Major Paper Grade Level | Number of Students | Average Total Feedback Given (words) | Missed Reviews |
|---|---|---|---|
| 1 (D or F) | 17 | 1,693 | 5+ or very short |
| 2 (C) | 11 | 3,321 | 3+ or very short |
| 3 (B) | 10 | 4,670 | 1 |
| 4 (A) | 6 | 5,574 | 0 |

Peer norms based on writers’ final draft grades

Each level of performance wrote roughly 1,000 more words of feedback than the level below it (the actual gaps range from about 900 to 1,600 words). That’s around four extra pages of writing per level. More writing practice leads to better writing.
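The per-level gaps can be checked directly from the averages in the grade table. A short sketch; the dictionary simply restates the table’s "Average Total Feedback Given" column.

```python
# Average total feedback (words) per grade level, from the grade table.
averages = {"D/F": 1693, "C": 3321, "B": 4670, "A": 5574}

# Difference between each grade level and the level just below it.
levels = list(averages)
gaps = {
    f"{lo} -> {hi}": averages[hi] - averages[lo]
    for lo, hi in zip(levels, levels[1:])
}
print(gaps)  # {'D/F -> C': 1628, 'C -> B': 1349, 'B -> A': 904}
```

The smallest gap (B to A) is about 900 words and the largest (D/F to C) about 1,600, so the step between levels is not uniform.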

Note that the big differences among diagnostic groups, shown in the heat map above, come from sandbagging review tasks by writing very short comments. In the table above, the big differences in final grades come from missed peer review activities. Those who sandbag, as well as those who skip peer learning, miss learning opportunities, and it shows.

Why did highly engaged reviewers improve?

We’d expect the strongest writers to be most engaged and write the longest comments. We’d expect struggling writers to write fewer words for lack of confidence and skill. Yet, the explanation that highly engaged reviewers improve no matter their predicted outcomes is powerful.

In the group of struggling writers, 10 of 16 missed one or two reviews, and only the 4 of those students whose word counts were similar to the solid writers’ outperformed their predictions. This is strong evidence that it’s not enough for struggling writers just to participate in giving feedback; to improve, they had to give feedback frequently and intensely.

Struggling writers needed to learn to detect more weaknesses in others’ drafts and say more about how to revise. Those who saw more and said more every week produced final papers earning a B or C.

This is the tricky part. Correlation is not causation. The correlation between feedback intensity and grades isn’t even particularly strong this term (correlation coefficient=-0.60). In other classes, the correlation coefficient has been closer to -0.70.
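For instructors who want to compute a coefficient like this for their own classes, Pearson’s r is short to write out. A minimal sketch, assuming per-student lists of total feedback words and grades coded on some numeric scale; the post does not say which correlation measure or grade coding produced its figures, so treat this as one reasonable choice. The sign of r depends on how each variable is coded, so it is worth checking the coding before interpreting a negative value.

```python
import math


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length samples with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Covariance and variances (the 1/n factors cancel in the ratio).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical per-student data: total feedback words and grade (1=D/F ... 4=A).
words = [900, 2100, 3500, 4200, 5600, 1500]
grades = [1, 2, 2, 3, 4, 1]
print(round(pearson_r(words, grades), 2))
```

On Python 3.10 or later, `statistics.correlation` computes the same coefficient.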

But the observation that highly engaged reviewers learn more fits neatly into three ideas:

- Engaged students succeed.

- Giving feedback teaches students something about writing they can’t learn from drafting.

- Writing more has value.

In fact, peer learning is a weekly event in my class precisely because it should cause learning. Giving feedback is one factor among many that contributes to learning in a peer review activity. Students learn from reading, listening to the follow-up discussions, and working through their own revisions. Among these, giving feedback is one of the most cognitively demanding because it asks reviewers to talk to writers about specific ways to transform the draft that is into the draft that could be. That’s the revision muscle that practicing peer review should build. Giving feedback proves that revision muscle moved. It might not have moved much or well, but it moved.

Because of this complexity, the absence of reviewer labor is more predictive than its presence. Reviewers who aren’t setting a lot of revision goals for other writers are at risk of doing too little to learn much. Writing half as many words as peers in comments is an early-warning indicator that students won’t succeed. There’s no magic in this prediction. It is simply a matter of recognizing that time and effort (or not) have an impact on learning.
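The half-as-many-words rule can be turned into a simple weekly check. A minimal sketch, assuming the group median stands in for "peers" (the post does not specify median, mean, or which peer group), applied within one diagnostic group at a time; the student names and word counts are hypothetical.

```python
from statistics import median

WARNING_RATIO = 0.5  # "half as many words as peers"


def off_pace(word_counts: dict[str, int], ratio: float = WARNING_RATIO) -> list[str]:
    """Return students whose feedback word count falls below ratio x the group median."""
    norm = median(word_counts.values())
    return sorted(s for s, words in word_counts.items() if words < ratio * norm)


# Hypothetical word counts for one diagnostic group on one paper's reviews.
group = {"A": 1200, "B": 950, "C": 400, "D": 1100, "E": 200}
print(off_pace(group))  # ['C', 'E']
```

Using the median rather than the mean keeps one or two very long reviewers from pulling the norm up and flagging students who are actually keeping pace.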

This data suggests that picking up the pace of writing during review is an important learning intervention. Alerting reviewers that they are off-pace in terms of word count isn’t an easy fix, as this data also shows. This course emphasized reviewer labor through explicit instruction, scaffolding, models, peer norms, data-driven self-assessments, low-stakes completion grades, and two high-stakes quality assessments. Even so, a third of the class struggled to write more words in feedback. Behind reviewer labor shortages, there are thorny motivational, skill, self-efficacy, and time management problems. Yet, students who decide to write a few more comments that are a little longer every week tend to find that it is worth it. For many students, writing better comments is their first success as writers.

Teaching (and motivating) students who are offering little feedback to comment more and say more per comment appears to be a necessary but insufficient condition for students at all levels of incoming skills to succeed. Writing more feedback won’t guarantee a higher-than-expected grade, but writing too little feedback is very likely to result in a lower-than-expected one.

Ethics Statement

The data in this post is part of a study of my own classroom. My institution declared my classroom research exempt because it uses only records of ordinary classwork.

The evaluation of student work is my own assessment, and it’s the one that affects students’ college trajectories. With funding and time, a more reliable assessment would be possible. Those gold-standard research methods would not change students’ grades, however.

In my reflective practice, I align these quantitative trends with my knowledge of students’ lives and work from their literacy history surveys, journals, mid- and end-of-term reflection papers, in-class participation, and conferences; that information is not covered in the IRB exemption and is excluded from this report.