Eli Review exists to help instructors see learning while students give each other feedback and use it to revise. The analytics point out who is on-track and who is struggling. Individual student reports already provide those details. Today, we’re releasing an update that helps instructors see how all writers are doing at a glance.

We’re calling this new option “tallied responses.” When creating a review task, you can now choose to have Eli tally a score for each writer based on reviewer responses. These tallies help instructors quickly identify which writers need extra help to meet the criteria.

Tallies are completely optional and are only visible to instructors. Reviews without tallies will look just like reviews always have.

Creating Tallied Response Types

While creating a review task, instructors will see an option to tally trait identification sets, rating scales, and Likert scales. Each set is tallied individually, so you can create reviews where some response types are tallied and others are not.

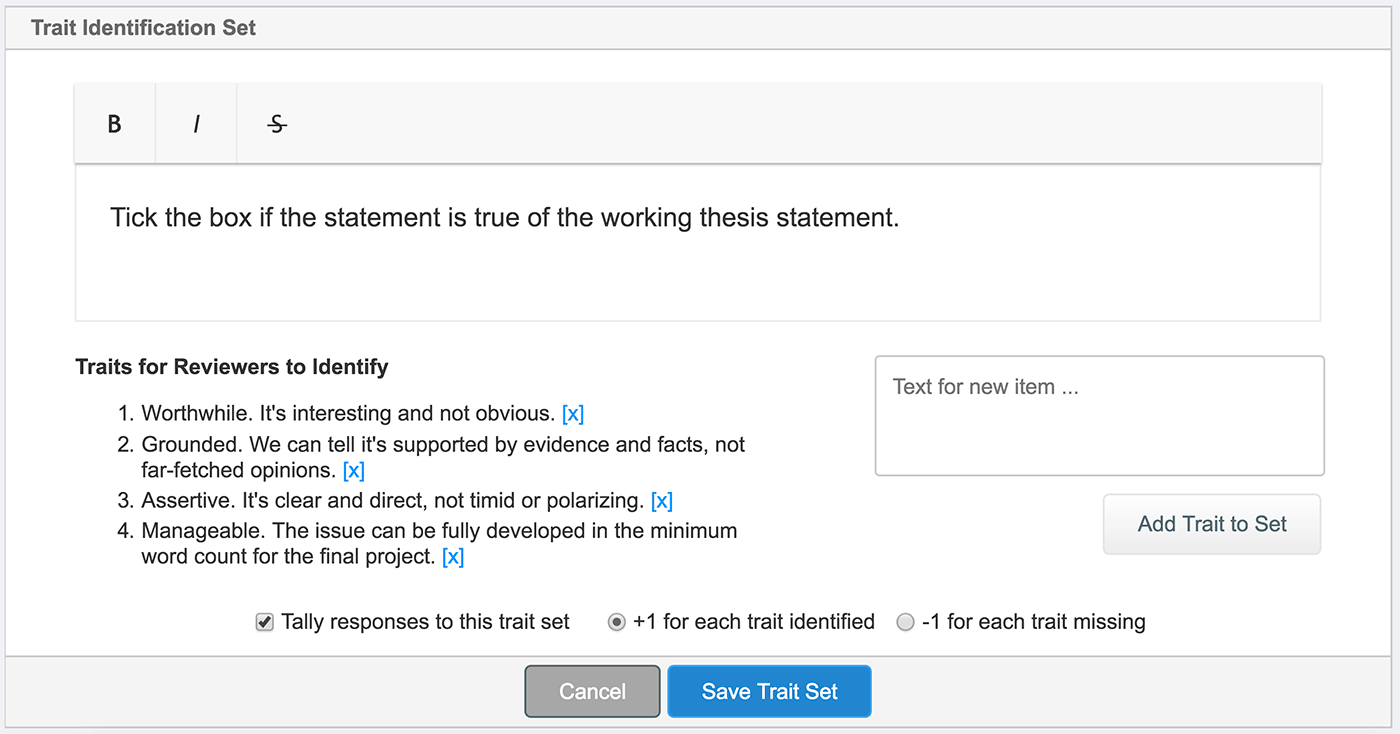

Trait Identification Sets

Tallying a trait identification set prompts you to indicate whether you want traits to have a positive or negative value. A positive value means that writers will be given one point for each trait identified by reviewers, and a negative value means that writers will lose one point for each trait identified. The valence applies to every trait in a set, so be sure to put all of the “must-have” criteria in a positive set and all of the “must-not-have” traits in a negative set.

| Positive Tally [“must-have traits”] | Negative Tally [“must-not-have traits”] |
|---|---|
| Tick the box if the draft meets these minimum standards: | Tick the box if the draft does NOT meet the minimum standards: |

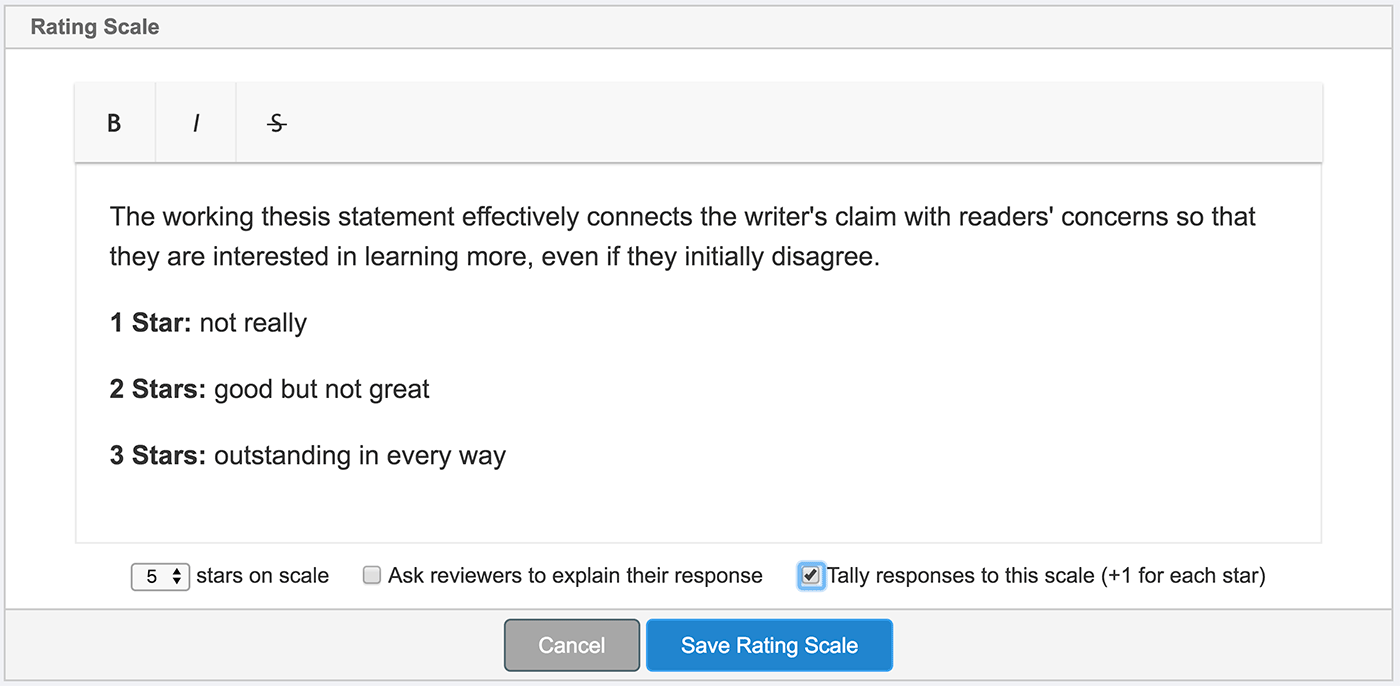

Rating Scales

Rating scales are tallied simply: each star given by a reviewer counts as one point. The more stars on the scale, the more points possible per review.

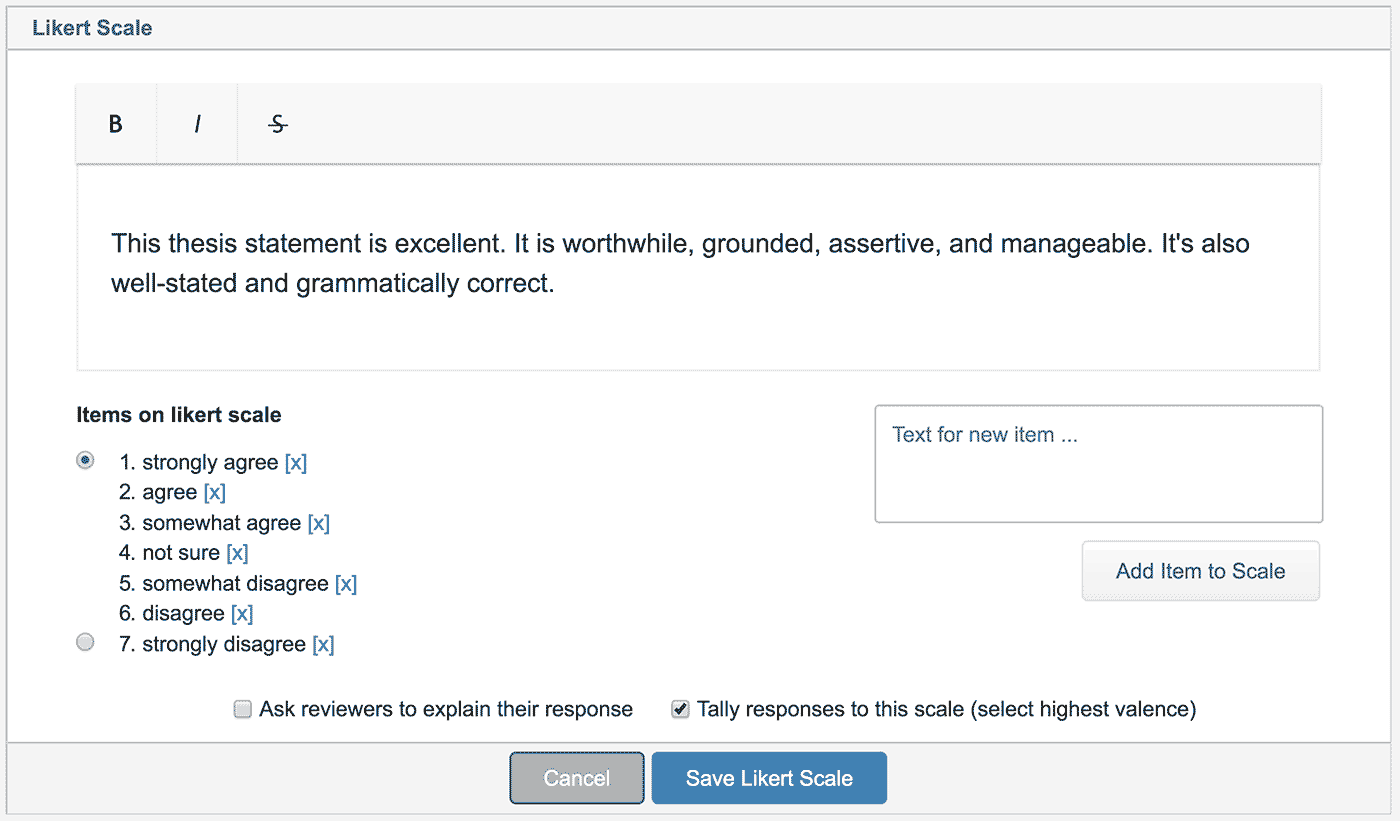

Likert Scales

Tallying a Likert scale requires you to specify which end of the scale is high and which is low. Writers receive one point when a reviewer chooses the lowest option on the scale, and as many points as there are options when a reviewer chooses the highest.
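The three scoring rules above can be sketched in a few lines of Python. This is a minimal illustration, not Eli's actual implementation: the function names are hypothetical, and scoring the Likert options between the two ends linearly is an assumption, since only the low and high ends are described here.

```python
def trait_points(traits_checked: int, positive: bool) -> int:
    """One point per checked trait; a negative set subtracts instead of adds."""
    return traits_checked if positive else -traits_checked


def star_points(stars_given: int) -> int:
    """Each star a reviewer gives counts as one point."""
    return stars_given


def likert_points(choice: int, options: int, high_is_last: bool = True) -> int:
    """Score a Likert choice given as its 1-based position in the displayed list.

    The low end earns 1 point and the high end earns `options` points.
    Linear scoring of the options in between is an assumption.
    """
    if not 1 <= choice <= options:
        raise ValueError("choice must be between 1 and options")
    return choice if high_is_last else options - choice + 1


# One reviewer checks 3 of 4 must-have traits, gives 2 of 3 stars,
# and picks the 5th option on a 7-option scale whose last option is high.
total = trait_points(3, positive=True) + star_points(2) + likert_points(5, 7)
print(total)  # 10
```

A writer's tally would then be the sum of these per-reviewer totals across everyone who reviewed the draft.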

What Reviewers See

Nothing new: these new scoring features will not be visible to reviewers at all; students don’t have to do any additional work for tallied responses, and the review interface looks the same as it always has for them. Eli handles all of the response tracking and scoring on the back end.

Tallied Results: Understanding the Data

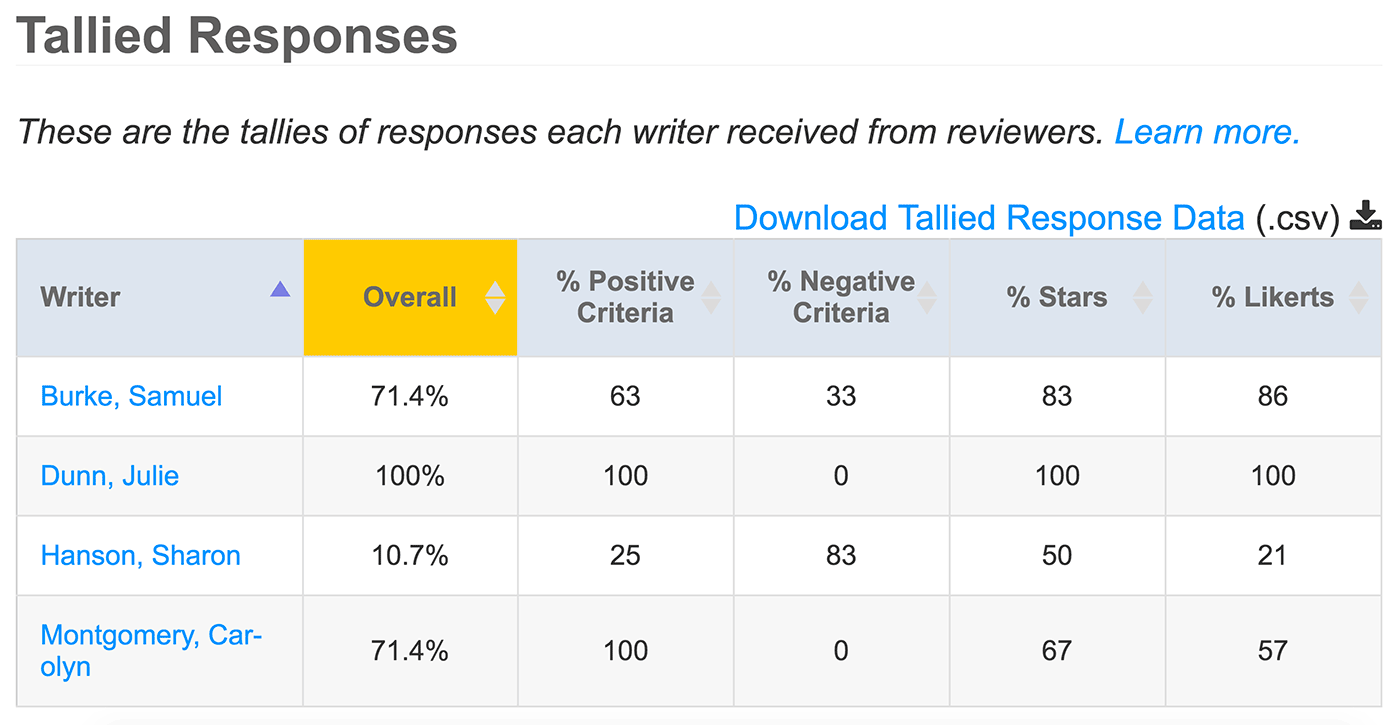

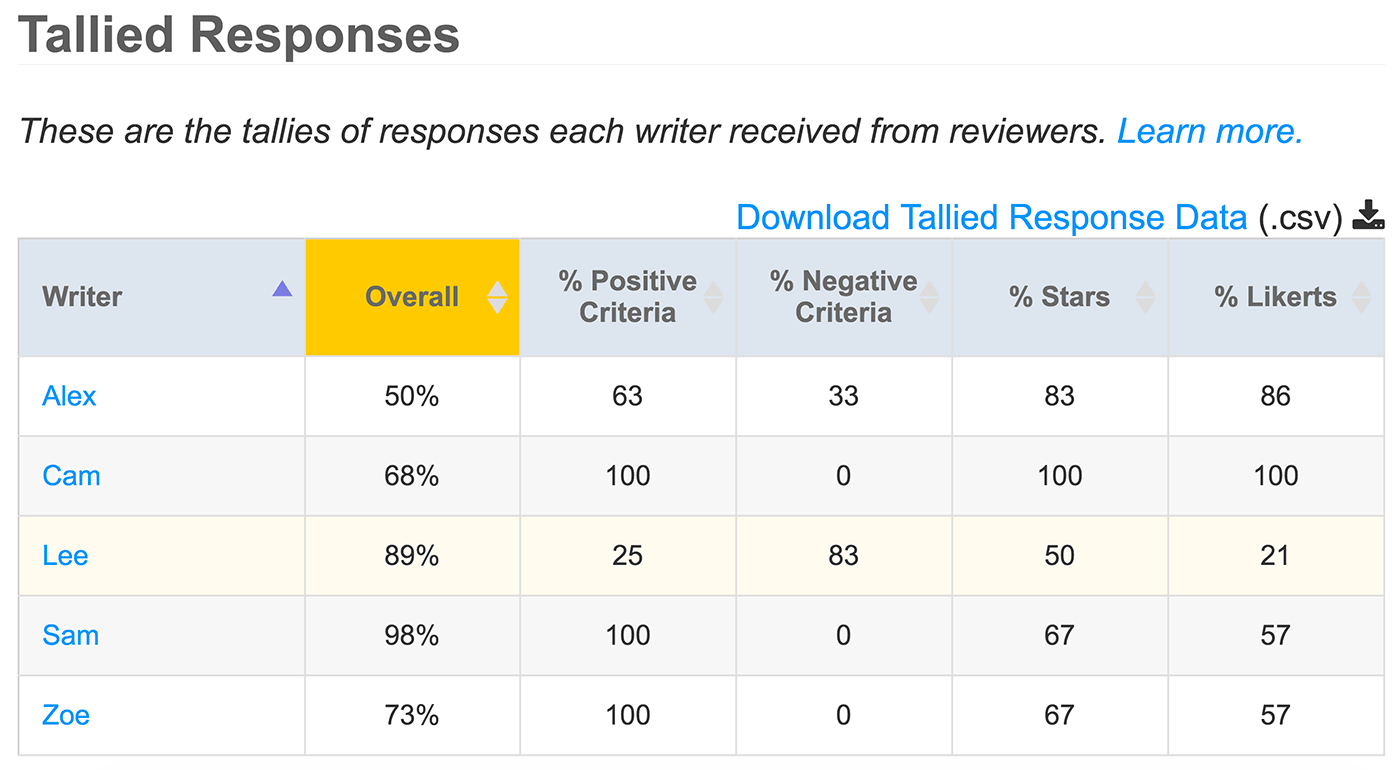

Once reviewers submit their feedback, Eli tallies that feedback and compares each writer’s earned points to the possible number of points. This tally is sensitive to group size, so writers in groups of 3 and those in groups of 4 have a different highest possible tally. A table summarizes earned tally/possible tally as percentages. Use the sorting features in the column headers to identify areas of concern.

This new table can be found under the “Writing Feedback” tab on a review report. The table is only shown for reviews with at least one response type tallied; otherwise, Eli won’t make one. Likewise, the table only includes columns for the response types that were tallied.

- % of Positive Criteria: the percentage of all positive criteria a writer received

- % of Negative Criteria: the percentage of all negative criteria a writer received

- % of Stars: the percentage of possible stars the writer received

- % of Likerts: the percentage of possible Likert points the writer received

- % Overall: the percentage of points possible the writer received: (positive criteria – negative criteria + stars + likert) / points possible
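The overall percentage can be written out as a small function. This is a sketch under stated assumptions: `overall_percent` is a hypothetical name, the numbers are made up for illustration, and `points_possible` is taken as given because how Eli counts negative criteria toward the possible total is not described here.

```python
def overall_percent(positive: int, negative: int, stars: int,
                    likert: int, points_possible: int) -> float:
    """(positive - negative + stars + likert) / points possible, as a percentage."""
    if points_possible <= 0:
        raise ValueError("points_possible must be positive")
    earned = positive - negative + stars + likert
    return 100 * earned / points_possible


# Hypothetical writer: 7 positive traits, 1 negative trait,
# 5 stars, and 10 Likert points out of 28 points possible.
print(overall_percent(positive=7, negative=1, stars=5, likert=10, points_possible=28))  # 75.0
```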

The table lists percentages per column for each student participating in the review. This new instructor analytic reveals reviewers’ feedback on all writers’ drafts from a single screen.

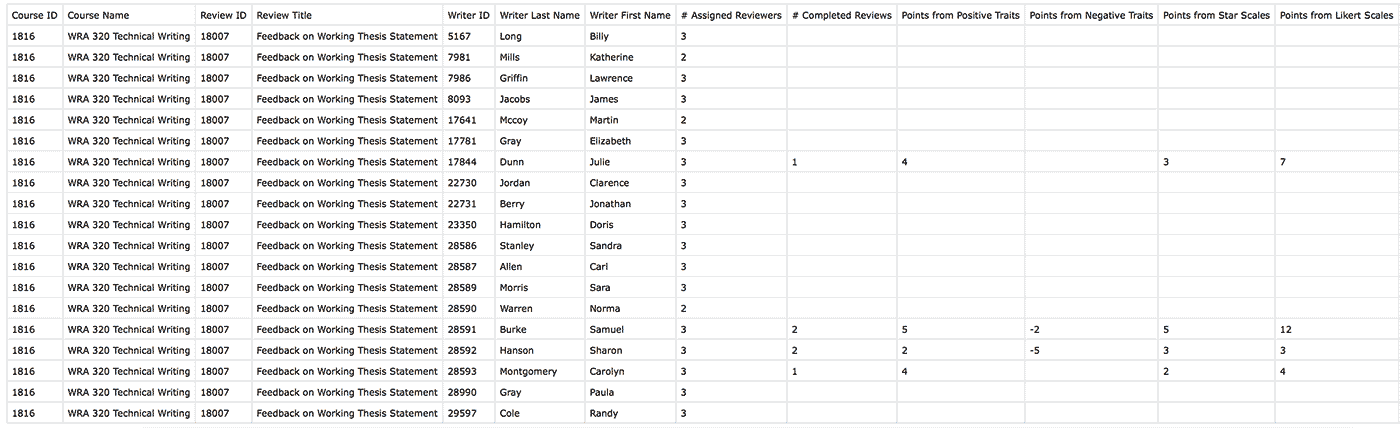

The table has a download option that will contain a more detailed breakdown of these tallies, including total points possible and points earned by each writer. See the screenshot below for a preview, or check out a sample in our example CSV download.

Tallied Responses Example

Tallied responses count up everything reviewers checked off and/or rated about writers’ drafts. Questions that focus reviewers’ attention on specific criteria so that they rate drafts carefully produce tallies that instructors can trust. As any survey designer knows, you have to design the questions for the data you want to see.

Using this Example

The preceding screenshots all describe the review we’ll discuss below. You can actually find this review in Eli’s curriculum repository by following these steps:

- Log in to Eli and select your course

- Start a new review task

- Click “Load Review Task from Task Library”

- Search the repository for Feedback on Working Thesis Statement

- Click the load button and all of this review’s settings will be saved into a new review


Review Questions

A working thesis is an attempt to articulate the main point of a project. It’s tentative in all the best ways. A peer learning activity should help writers push their thesis a little further toward the criteria of effective, polished thesis statements.

Here’s a review that guides reviewers using checklists and ratings to read carefully and respond thoughtfully to a working thesis statement:

Tally of the Review Questions

The working thesis review includes:

- A trait identification checklist requiring reviewers to indicate if the working thesis is worthwhile, grounded, assertive, and manageable. (4 possible points)

- A rating scale requiring reviewers to indicate how well the working thesis anticipates readers’ concerns. (3 possible points)

- A Likert scale requiring reviewers to indicate its overall effectiveness. (7 possible points)

The ideal tally for these questions is 14 from each reviewer.

Tallied Response Report

The tallied response report for this activity shows how well reviewers thought writers’ working theses met the criteria. Each student’s percentages for trait identification checklists, star ratings, and Likert ratings are shown. Although some students got feedback from two peers and some from three, the percentages account for group size.

In the Overall column of the table, the percentages show the range of quality in the working thesis statements. It’s clear that Alex (50%) is going to need extra help to revise.

Not An Appropriate Peer Grade

It’s not clear, however, that there’s a big difference in quality between Cam’s draft (68%) and Zoe’s (73%). Only one peer vote separates Cam’s 68% from 71%. As an assessment instrument, this review isn’t sensitive enough for one peer vote to determine a C or a D. Using it that way isn’t good grading practice.
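The arithmetic behind that one-vote gap can be sketched as follows, assuming a writer with two reviewers on this 14-point review (28 points possible). The earned totals of 19 and 20 are illustrative values chosen because they round to the percentages mentioned above; they are not taken from the report.

```python
POINTS_PER_REVIEWER = 14  # 4 checklist + 3 star + 7 Likert points in this review


def percent(earned: int, reviewers: int) -> int:
    """Earned points as a rounded percentage of the points possible."""
    possible = POINTS_PER_REVIEWER * reviewers
    return round(100 * earned / possible)


def one_point_swing(reviewers: int) -> float:
    """Percentage points a writer gains from a single extra point."""
    return 100 / (POINTS_PER_REVIEWER * reviewers)


print(percent(19, reviewers=2), percent(20, reviewers=2))  # 68 71
print(round(one_point_swing(2), 1), round(one_point_swing(3), 1))  # 3.6 2.4
```

With two reviewers, a single checked box or star moves the overall percentage by about 3.6 points, which is enough to cross a letter-grade boundary.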

If the comparison between Cam and Zoe’s percentage isn’t convincing enough, there are two more reasons not to treat the overall percentage as a grade.

First, a peer review of a working thesis is designed to help students revise. A bad grade punishes them for needing to revise. Alex (50%) actually benefits most from this activity because the revision steps are clear and numerous. Sam (98%) benefits least because the draft already meets or exceeds the basics.

Second, the questions were set up to guide reviewers in applying the criteria to the draft. That’s different from setting up the questions to grade the draft.

If I wanted reviewers to grade a draft, I’d make sure the points available for checklists and ratings were more balanced. In this review, checklists are only 4 points, and ratings are 10 points. The checklists are the strongest indicators of draft quality; to get a grade that reflects that priority, I’d weight each checklist item at more than 1 point.

The tally doesn’t have weights, but I could do that math with the downloaded CSV file. That’s extra work for me because I’m converting formative peer feedback into a grade of draft quality, forcing a square peg into a round hole.
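For anyone who does want to try that math, here is a rough sketch of re-weighting checklist points from a CSV. Everything specific in it is an assumption: the column names, the sample rows, and the weight of 3 are hypothetical, and the real download’s headers may differ.

```python
import csv
import io

# Hypothetical column names and rows; the real download's headers may differ.
SAMPLE_CSV = """writer,checklist_earned,checklist_possible,ratings_earned,ratings_possible
Writer A,6,8,15,20
Writer B,8,8,10,20
"""

CHECKLIST_WEIGHT = 3  # assumed weight: each checklist point counts three times


def weighted_percent(row: dict) -> float:
    """Re-weight checklist points, then recompute earned / possible."""
    earned = CHECKLIST_WEIGHT * int(row["checklist_earned"]) + int(row["ratings_earned"])
    possible = CHECKLIST_WEIGHT * int(row["checklist_possible"]) + int(row["ratings_possible"])
    return round(100 * earned / possible, 1)


rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
weighted = {row["writer"]: weighted_percent(row) for row in rows}
print(weighted)  # {'Writer A': 75.0, 'Writer B': 77.3}
```

Unweighted, Writer A would score 75% and Writer B about 64%, so the weighting changes their order. That shift shows how much the choice of weights, rather than the peer feedback itself, would drive a grade.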

This particular review shows that counting up what criteria reviewers think writers have met can be much more valuable than a grade: Tallied responses can help instructors know who needs their help.