At CCCC@SJSU, “Making Spaces for Diverse Writing Practice,” four San Francisco State University instructors (Joan Wong, John Holland, Martha Rusk, and Evan Kaiser) and I led a workshop titled “Workshop 5: ‘We’re No Longer Wasting Our Class Time!’: A Hands-On Workshop for Better Online Peer Learning.”

This workshop synthesized the conversations we had most Fridays during the 2016-2017 academic year. It is also our best explanation for some truly stunning student satisfaction data.

Preface: How We Know We’re Doing Something Right

Since Spring 2015, a group of instructors at San Francisco State University has been using Eli Review to facilitate peer learning. Whether in traditional F2F first- and second-year writing classes or in SFSU’s redesigned hybrid course for second-year writing, these instructors have found that Eli Review complements the writing process. According to end-of-semester surveys conducted for an IRB-approved study, their students agree.

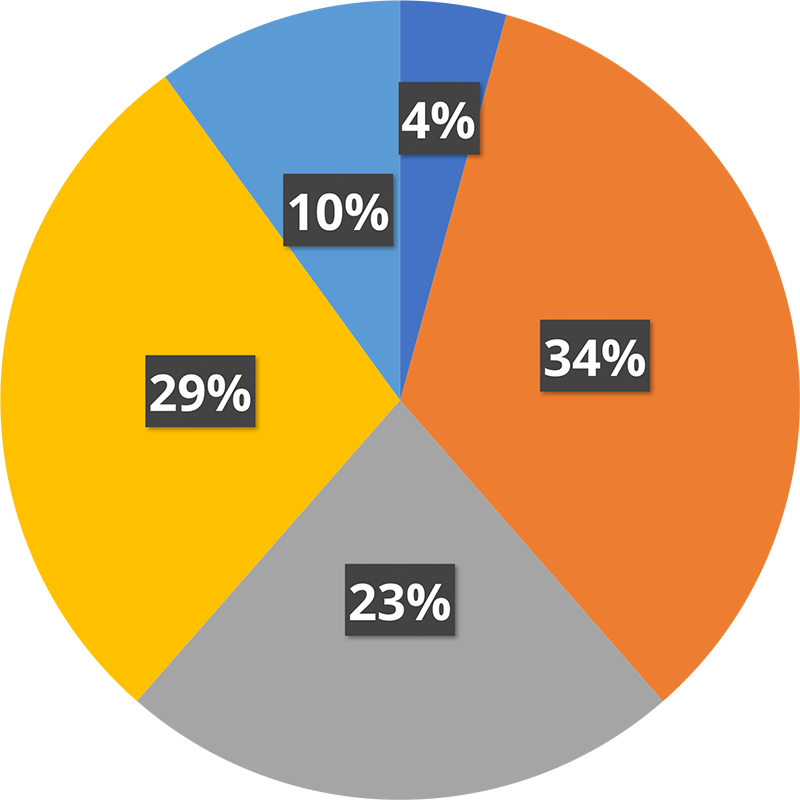

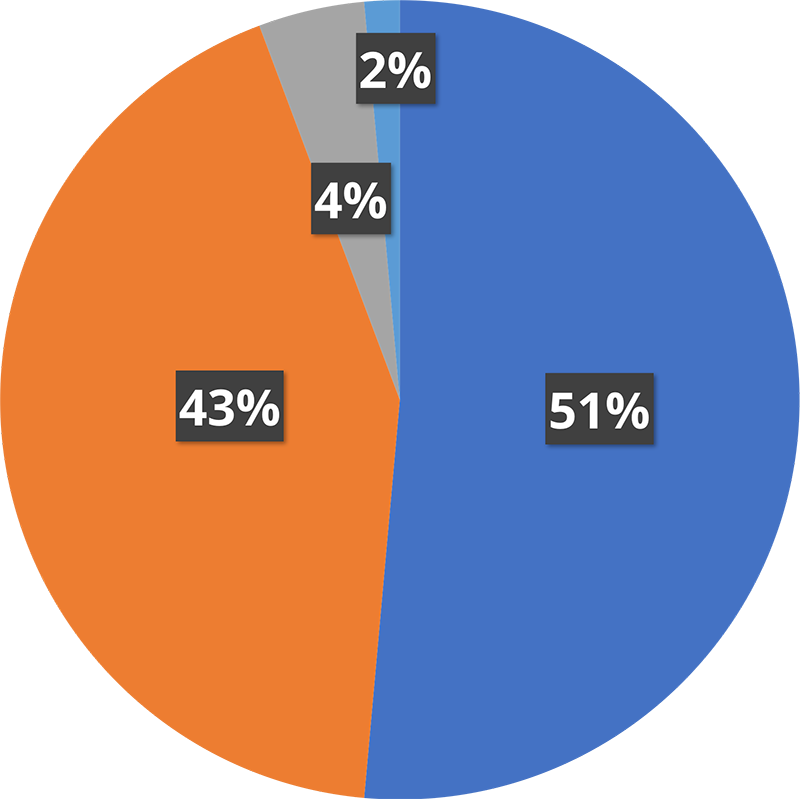

In Fall 2016, only 39% of 70 students reported that their experiences with peer learning prior to the course were very or somewhat useful; a whopping 94% of them found peer learning very or somewhat useful after using Eli Review.

| Past Experiences with Peer Learning | Experience with Peer Learning in Eli Review |

|---|---|

|

|

|

|

Every semester, the numbers are the same. They’re the same across instructors, too, and across F2F and hybrid courses. Our weekly conversations about peer learning pedagogy, focused on feedback and revision, have created an instructional consistency that has led to measurable gains in student satisfaction.

In addition, SFSU instructors are pleased that Eli Review reinforces the writing process in their classrooms by extending feedback and revision, steps that are usually addressed only superficially.

That’s an amazing change, especially when you learn more about the history of peer learning from the two experienced instructors on the panel, Joan and John.

Part 1. How We Decided Online Peer Learning Was Worth The Time

When Joan Wong taught fifth graders, her students begged for peer feedback. When she started teaching first- and second-year writing in college, peer review sucked all the energy out of the room. A few strong students would give great feedback, but most would not.

Joan framed this disparity as the same problem that underlies Keith E. Stanovich’s (1986) observation:

“In reading, the rich get richer and the poor get poorer.”

Without a scaffold and a pedagogy focused on improving feedback, good writers worked hard to become better reviewers, and everyone else didn’t.

Students are aware of this disparity. They might not invest time in giving good feedback because they know they won’t get it back. If they don’t learn to give good feedback, the gap between the “rich” and the “poor” is unlikely to narrow.

Frustrated by the outcomes for learning and classroom dynamics, Joan dropped peer learning six years ago in favor of more instructor conferences. She would still be doing a lot of heavy lifting in many, many hours of conferences if she hadn’t observed John Holland’s class using Eli Review.

Joan discovered, to her surprise, that Eli Review reflects her pedagogy, allowing her to assess student drafts and to adjust her lesson plans to meet student needs. More importantly, Joan found that Eli Review provides students with an opportunity to internalize the value of reading from two perspectives: as a reader and as a writer.

What is Eli Review?

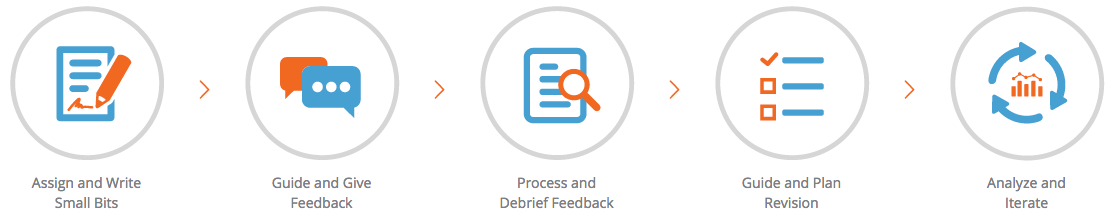

Eli Review is a scaffold for feedback and revision. Everything that happens in traditional peer review happens in Eli too. Students write, and they answer questions designed by the instructor to give feedback to peers. But Eli’s app also coordinates and reports peer activity so that instructors can see trends in what reviewers are saying to writers and in what writers need to revise.

To traditional peer learning, Eli Review adds features that allow instructors to endorse comments and writers to rate how helpful feedback was to them. These features create a reputation system so that instructors can tell at a glance whether peer learning is meeting writers’ needs.

Eli also adds revision plans. Writers build plans by selecting peer feedback, prioritizing it, and then explaining how they’ll use that feedback to revise. The revision plan shows students’ thinking about the problems and solutions they need to address in their next draft. Instructors respond in order to coach the thinking writers are doing before they revise.

Eli makes it easy for students to go through the motions of a good writing process: write, give feedback, get feedback, and use feedback to revise. Teachers unlock the power of a good writing process by coaching students just in time using Eli’s analytics.

Part 2. How to Raise Students’ Expectations for Peer Learning

Because students bring so many mixed experiences to peer review, instructors have to help students expect the best from themselves and others. That requires explicit instruction in how to give helpful feedback. For peer review to work well, the least confident students need to know how to contribute; or, to follow Stanovich, the poor have to know how to get richer.

Closing the gap between strong and weak reviewers was a problem John Holland noted in his teaching journal at the end of his courses for two years running. But he couldn’t solve it until he started working with Eli Review in Spring 2015. When the program redesigned ENG 214 as a hybrid course, John panicked about peer review, not knowing how he could possibly find time to run feedback groups with only half the class time available. He already sensed that peer feedback wasted time in class, and in a hybrid course, he had no time to waste. What to do? His WPA, Jennifer Trainor, directed him to Eli Review because she thought “Eli’s pedagogy looked sound.” John started a conversation with the Eli team as his new course got underway, and we’ve been talking ever since, bringing more of his colleagues into the group each term.

In the CCCC@SJSU workshop, John shared the strategies that Eli has helped him put into practice for teaching students to offer helpful feedback:

- Explain to students that their future career as leaders and managers depends on learning to give helpful feedback.

- Stop assuming that one class day is enough instruction in how to be an effective reviewer; instead, adopt a weekly peer review routine.

- Teach students Bill Hart-Davidson’s pattern for composing a helpful comment: “Describe-Evaluate-Suggest.”

- Empower students to ask for more helpful feedback by being “stingy with the stars” in helpfulness ratings, a task students complete while John calls roll.

- Endorse comments rarely, but celebrate the high-quality ones by showing and discussing them in class.

These commitments set students up for success in the very hard task of telling another novice writer how to improve. When students know what to do and get rewarded for doing it well, they work harder to be helpful reviewers. John doesn’t grade students on their helpfulness ratings or on the number of blue thumbs-up they get when he endorses their comments (students are, however, graded on their overall effort in peer learning). These digital badges and his in-class shout-outs help motivate students week to week.

Part 3. How to Check for Understanding in Peer Learning

Getting students on track to be helpful reviewers is not only about teaching them how to offer helpful comments but also about guiding them on what criteria to discuss. In Eli, instructors guide what students talk about by designing review tasks.

Ask Clear Questions about the Most Important Criteria

Review tasks in Eli are like surveys. When tightly focused and well phrased, surveys can illuminate what participants are thinking, and so can review tasks. Here, too, “describe-evaluate-suggest” can help instructors design review tasks that pace how reviewers read drafts and shape what they say to writers. The table below shows how the different response types in Eli’s review tasks can be used to help reviewers give better feedback:

| Feedback Step | Response Types in Eli Review Tasks |
|---|---|
| Describe | Trait Identification Checklists |
| Evaluate | Star Ratings, Likert Ratings |
| Suggest | Contextual Comments, Final Comments |

Use Peer Review Results to Emphasize Revision Goals

Because review task questions are designed like a survey, they generate data like a survey. For example, the trait identification checklist from Evan Kaiser’s class shown below tells him that only 27% of the drafts read by reviewers included a publication date in parentheses for APA-style citations. That’s a trend students can likely correct now that they know about the problem. The more concerning pattern, though, is that only 59% of the drafts read by reviewers included a clear summary or paraphrase of a source, and that’s the essential criterion for this assignment.

Eli gives Evan real-time information about student performance that would otherwise take two weeks to notice. Now he can plan a mini-lesson about clear summaries for the next class session.

Help Students Understand What Makes Strong Drafts Effective

He can also model a clear summary using a draft that reviewers thought was very successful. Because Evan included a star rating question, Eli shows him the three drafts that review groups rated highest, which he can use as exemplars. Evan set this review to be anonymous, but even without that setting, he can share these exemplars from his instructor view without revealing names.

By clicking the link for Classmate #18, Evan can locate the writer’s draft, project it, and have students talk through why they agree (or disagree) with the reviewers who nominated it as an exemplar. These kinds of modeling conversations help students better understand the criteria that Evan wants to see in their writing. By discussing successful and unsuccessful writing, students get better at close reading drafts, including their own.

Expect Students to Use Feedback to Revise

Better feedback and a better understanding of exemplars lead to better writing only if students use the feedback to revise. In Eli’s revision plan, writers can reflect on specific peer feedback they’ve received and note other revision goals they’ve set based on reading others’ drafts and listening in class. This holds students accountable for all the feedback exchanged in the class, not simply the feedback they personally received.

Martha Rusk explained how she uses revision plans to provide individualized instruction. Her approach is to lean out before leaning in. When peer review and debriefing have been effective, most students can identify the two or three top priorities for revision. She looks first at the student’s work holistically and asks, “How much do I actually need to add?” Students can’t handle more than those two or three goals before submitting a new draft the next week, so it saves her time to say, “You’ve picked the right goals; proceed!” If students are off track, she can write a more extensive comment to coach the thinking they are doing between drafts. By reinforcing peer feedback rather than overwriting it with her own, Martha helps students learn to trust their peers and themselves to make effective revision decisions.

Part 4. How to Assess Engagement in Peer Learning

Eli scaffolds students’ work as writers and reviewers, making it easier for students to go through all the motions. Helpfulness ratings, endorsements, exemplar discussions, and shout-outs for highly rated comments can motivate students to work hard in peer learning. But nothing motivates quite like grades.

John Holland developed a weekly engagement rubric based on a model suggested by Scott Warnock in Teaching Writing Online and adapted it to Eli Review in conversation with one of Eli’s co-inventors, Mike McLeod. Joan offers this summary:

- 10 points – Highly Rated Describe – Evaluate – Suggest & Several Endorsed Comments

- 9 points – Detailed Describe – Evaluate – Suggest Comments

- 8 points – Required Describe – Evaluate – Suggest Comments

- 7 points or Below:
    - Missing Parts of Describe – Evaluate – Suggest
    - Grammatically Unclear Feedback
    - Late

- 0 points – Hurtful, Aggressive, Offensive, Rude Comments or No Engagement

The full description of the engagement rubric is available. Also, this screencast explains how Eli’s analytics help instructors streamline their grading time. Instructors look only for the students earning 9 or 10 points or 0–7 points and enter those scores manually in the LMS gradebook. Then, since most students meet the requirements, it’s easy to select the rest of the class and give them all 8 points at once.

By monitoring students’ engagement in the full write-review-revise process, instructors reward students for doing the heavy lifting that improves their writing. It also helps instructors notice, very early in the semester, who is not working hard enough to learn much. John reports that with Eli he’s able to pull struggling students into his office for a conference by week 2 or 3; without Eli, it would have been week 5 or 6 before he even knew who needed outreach.

Part 5. Where We Are in Our Thinking about Peer Learning Pedagogy

If you’re still reading, you can’t help but notice how differently these four teachers spend their time. They’re invested in making peer learning both routine and powerful. They design tasks in order to see if learning is happening, and they watch formative feedback analytics, not just facial expressions and final drafts. They teach a pattern for helpful comments, reinforcing that pattern weekly with their class time and participation points. They build in enough practice cycles so that even the weakest writers have time to improve.

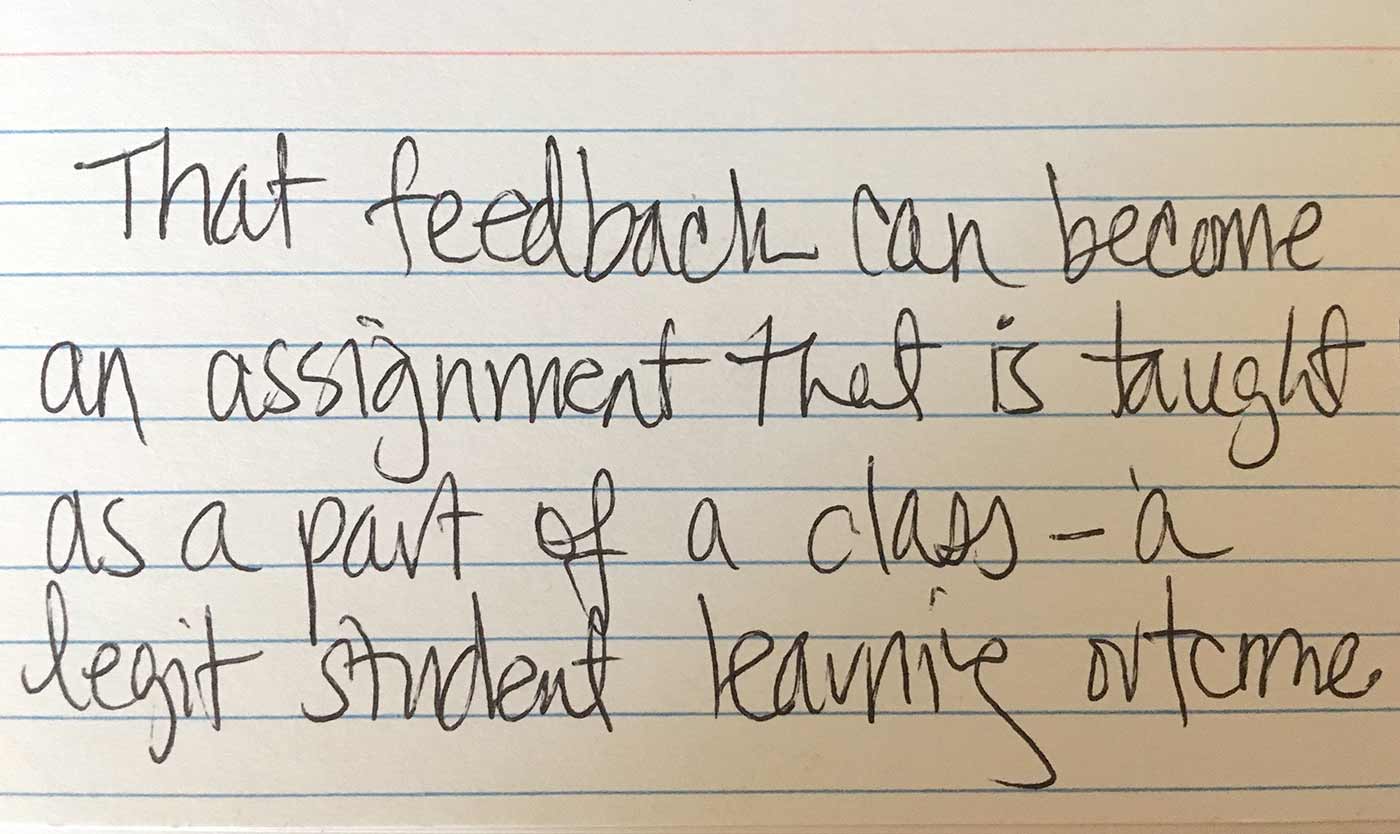

As a participant in the CCCC@SJSU workshop observed, the big idea is that better feedback is a legitimate learning outcome of a writing course.

These instructors are putting the feedback students GIVE and USE at the center of their writing courses, and it’s working to improve students’ satisfaction with peer learning. It’s also moving the needle on students’ improvement in their portfolios, especially in the quality of their reflections, but that’s a story for another day.

Be a Slow Radical

John offers this anecdote to explain how radically Eli has changed his approach to teaching writing:

> I recall that the first time that I was observed for an evaluation as a new lecturer way back in the day, I was told not to choose a peer review day because “We need to see you actually teaching something.” That was my introduction to the value we in the field of composition place on peer learning.
>
> A couple of years ago, my institution revised its procedures for evaluating lecturers. As we met together to discuss how evaluation and observations would be structured, once more we were told not to invite the designated observer on peer review day. We all know that not much teaching happens then. Does students’ sense that peer review is mostly useless emerge from their awareness that we aren’t actually teaching anything on peer review day?
>
> I will be evaluated and observed again in two years. I intend to stress to my observer that in my classes every day is peer learning day and that if anyone wants to see me actually teaching, then they need to observe how I work to teach students how to give helpful feedback to classmates and how to get feedback that will inform a substantial revision plan.

John, Joan, Martha, and Evan have all figured out how to use Eli to help their students do the work real writers do: share drafts, give feedback, get feedback, plan revisions, make global and local changes, and repeat. They’ve changed the rhythm of feedback and revision that writers experience, and for students, doing the write-review-revise cycle routinely is far more compelling than hearing about it.

Be a Slow User of Features

Even though all of these instructors are clearly using Eli most days of the week, Evan cautions new instructors that they don’t have to use all the features at once. In his first semester, he focused on features that enhanced weekly F2F teaching:

- Creating, linking, and naming tasks so that the purpose of the write-review-revise cycle was clear to students

- Setting up review criteria that give useful formative assessments

- Using scaled responses to nominate peer exemplars

- Using trait identification to generate mini-lessons on what students didn’t “get”

- Setting up groups and managing late writers

Next semester, he’ll use more of the analytics for assigning engagement points and checking the pulse of peer learning activity.

Don’t Be a Loner Radical

What we’ve learned together as a group is that we learn best as a group. It’s the same principle we teach students. By sharing our teaching goals and struggles, we get feedback that helps us improve as teachers. Together, we’re figuring out how to make students’ writing, their feedback, and their revision plans take up the most space in our classrooms.

References

Stanovich, K. E. (1986). Matthew effects in reading: Some consequences of individual differences in the acquisition of literacy. Reading Research Quarterly, 21(4), 360–407.