This post is a part of the series Challenges and Opportunities in Peer Learning.

In the opening post for this series, we noted that unhelpful feedback is one of the most common reasons instructors tell us they won’t commit to peer learning. In the face of bad peer feedback, instructors often choose to become the primary feedback provider. By working so closely with individual writers and their drafts, instructors put themselves in the middle of the noisiest stream of evidence about student learning (drafts), where it is harder to know how to coach students’ thinking. Instructors are also leery of unhelpful peer feedback because it can lead to writers’ disengagement and to reviewers’ lack of confidence.

In this way, unhelpful peer feedback is contagious. Prevention isn’t possible (because learning!). The solution is to stop bad feedback in its tracks. Instructors need to teach and reward helpful feedback. When teachers provide instruction, practice, and accountability around helpful feedback, they can make unhelpful feedback a short-term rather than chronic condition of peer learning.

Part 1: Do you want bad feedback to be a short-term or chronic condition?

Over the last thirty years, we’ve learned a lot about the value of helping students improve their writing not only by working on their drafts, but by working on how they talk—and think—about their writing in general. This work on the “metadiscourse” of writing has mostly focused on genres that should by now seem familiar to readers of this series (Hyland, 2005): comments made during reviews, revision plans, and student reflections on their writing.

In this post, we explain why improving metadiscourse is an important learning goal in writing classes. Specifically, we explore why bad feedback between peers is still good feedback for instructors. Students who can’t talk to each other about their work are unlikely to be able to make strong revisions. Just-in-time coaching around unhelpful comments redirects students’ attention so that they focus on the revision priorities that matter most.

Design Challenge: Bad peer feedback happens.

Let’s be honest: Bad feedback happens in classrooms, workplaces, and even in scholarly publishing. Reviewers in all those contexts can be lazy, ignorant, or rude. But, they also have the potential to be thorough, insightful, and respectful. For instructors, the challenge is to figure out how to get less bad feedback and more helpful feedback in peer learning.

In a product-oriented classroom, writers are in the same position as scholars receiving an underwhelming peer-review: They have to grin and bear it, revising in spite of unhelpful feedback. Also, like scholars submitting their work, writers’ risk of receiving unhelpful feedback is no better after the fifth submission than it was on the first.

In a process-oriented, feedback-rich classroom, instructors can design peer learning to favor writers’ interests. By teaching reviewers to do a better job, they help writers get better feedback. Because of the instructor’s teaching priorities and strategies, the possibility of bad feedback declines over time. Instructors can promise better feedback. They can promise it because they’ll teach it.

Design Opportunity: Learn from Bad Feedback

Teaching students to give better feedback begins by learning what kinds of mistakes they are making in giving it. Seeing how students respond to others’ writing using criteria you’ve set in a review activity can provide valuable information about who is struggling to understand the goals for a writing task and who may be struggling with strategies for achieving those goals. There are several important things you can do when you design a review to help you see who is getting it and who needs extra help.

Strategy: Look for reviewers’ confusion.

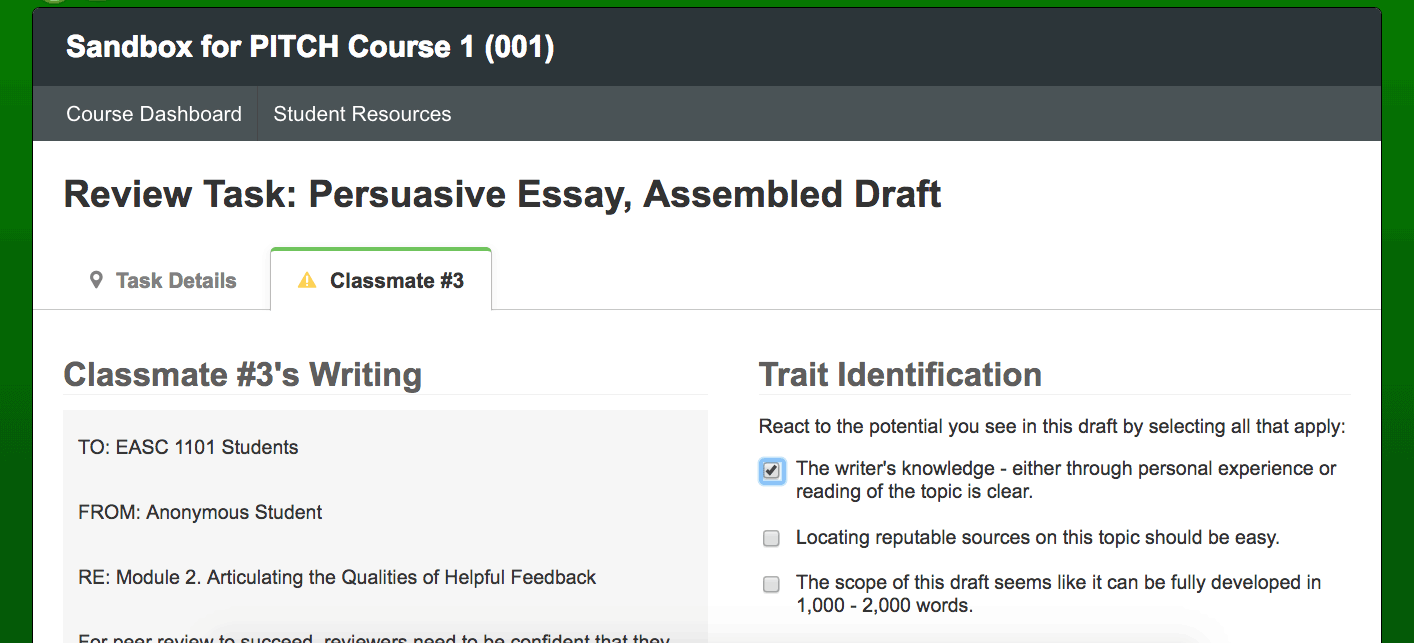

It’s easier for reviewers to tell if a draft meets a high-level, complex goal if they’ve decided it meets smaller, discrete goals first. Simple “I see it” or “I don’t see it” checklists guide reviewers to look for specific criteria in drafts. In Eli, these checklists are called trait identifications.

For example, if you want to know if reviewers understand “synthesis,” a trait identification checklist might ask reviewers to tick the box if they think a draft does a good job

- stating the main point of a source

- connecting the source to other sources or ideas, and

- explaining why the source matters to the project.

Then, check an individual writer’s Feedback Received report, which aggregates results from all reviewers. When you see 100%, you know that all reviewers agree the draft has that trait. Check the draft yourself. If you agree that the writer met the criteria, then you can be confident that reviewers were clear about the criteria.

When you see that a writer’s checklist has 33% or 25%, then one reviewer in the group disagrees with the rest. Check the draft yourself to figure out who’s right. Then, check more writers.

If there are a lot of reviewers who are out of synch with their groups on the criteria or out of synch with your assessment of drafts, adjust your lesson plan for the day; work on clearing up students’ confusion around whether a draft meets expectations or not. If only a few are muddled about it, have a conversation with the small group to clear things up.
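If you keep your own tally of checklist responses, the percentages described above are simple to reproduce. The sketch below is only an illustration: the `responses` list, the names in it, and both function names are hypothetical, and it does not reflect how Eli stores or exports its data.

```python
from collections import defaultdict

# Hypothetical tally: (writer, reviewer, trait, checked?).
# This is NOT Eli's data format, just a stand-in for illustration.
responses = [
    ("Ana", "Ben", "states main point", True),
    ("Ana", "Cy", "states main point", True),
    ("Ana", "Dee", "states main point", True),
    ("Ana", "Ben", "connects sources", True),
    ("Ana", "Cy", "connects sources", False),
    ("Ana", "Dee", "connects sources", False),
]


def trait_agreement(responses):
    """Return {writer: {trait: fraction of reviewers who ticked the box}}."""
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for writer, _reviewer, trait, checked in responses:
        tally = counts[writer][trait]
        tally[0] += int(checked)  # reviewers who saw the trait
        tally[1] += 1             # all reviewers who answered
    return {
        writer: {trait: yes / total for trait, (yes, total) in traits.items()}
        for writer, traits in counts.items()
    }


def split_decisions(agreement):
    """List (writer, trait, fraction) wherever reviewers were not unanimous."""
    return [
        (writer, trait, fraction)
        for writer, traits in agreement.items()
        for trait, fraction in traits.items()
        if 0 < fraction < 1
    ]


agreement = trait_agreement(responses)
for writer, trait, fraction in split_decisions(agreement):
    print(f"{writer}: '{trait}' ticked by {fraction:.0%} of reviewers")
```

A split decision only tells you where to look. You still need to read the draft to decide which reviewers were right.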

Strategy: Weigh in on the drafts students think are excellent.

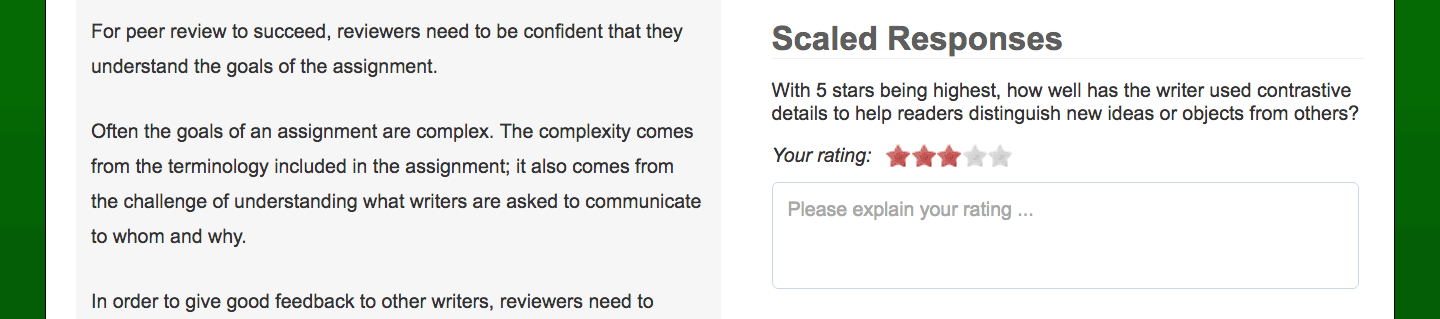

After they have worked through a checklist of traits, reviewers are better prepared to rate how well the draft accomplishes that high-level, complex goal.

In Eli, two kinds of ratings are available: Likert and star ratings. A star rating question invites reviewers to nominate peer exemplars, and we recommend this type of question because it makes it easy to find the drafts reviewers think are excellent.

To follow up on the checklist above, reviewers might answer this star rating question:

This draft effectively synthesizes all the sources.

1 star = Strongly Disagree; 3 stars = Unsure; 5 stars = Strongly Agree

The class data results for the instructor show the top three drafts based on reviewers’ ratings. Check these drafts to see if you agree that the highly rated drafts are exemplary.

- If they are, lead a discussion about the good examples and how the writers achieved their exemplary results.

- If you disagree, lead a discussion about where your perceptions differ from those of the reviewers and suggest ways for the group to course correct.

By explaining how you react to the drafts reviewers like, you help students give better feedback next time.
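For instructors who record star ratings outside of Eli, ranking drafts by their average rating is one plausible way to find the top three. This is a minimal sketch under that assumption: the `ratings` dictionary and the `top_drafts` function are hypothetical, and we are not claiming this is how Eli computes its class data.

```python
from statistics import mean

# Hypothetical star ratings (1 to 5) each writer's draft received.
ratings = {
    "Ana": [5, 4, 5],
    "Ben": [3, 3, 4],
    "Cy": [4, 5, 4],
    "Dee": [2, 3, 3],
}


def top_drafts(ratings, n=3):
    """Return the n writers whose drafts have the highest average rating."""
    averages = {writer: mean(stars) for writer, stars in ratings.items() if stars}
    return sorted(averages, key=lambda writer: averages[writer], reverse=True)[:n]


print(top_drafts(ratings))
```

An average hides disagreement, so a draft rated 5, 5, and 1 deserves a closer look than its average alone suggests.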

Strategy: Use contextual comments to see what reviewers can do.

How students talk with each other about their work—their metadiscourse—is essential for their learning. Use a targeted prompt for contextual comments that asks reviewers to show what they know.

A prompt that directs reviewers to highlight a passage and name a specific instance of a desired feature can be a really good way to see which students understand a tricky move they must make in their own drafts. For instance, you might ask students to highlight an example of a sentence containing a “warrant” and then explain which claim and evidence the warrant links. Reviewers who can’t find the warrant or can’t articulate the link it makes are likely unable to include warrants in their own drafts.

If it sounds like this is a strategy to use the review as an early, and more focused, indicator of students’ learning, you are getting the picture. Here is an instance where students’ metadiscourse helps you know what to expect from their own writing to come and how to respond to it. Working early with comments and survey responses in this way makes your downstream work as a teacher much more specific, personalized, and effective.

Design Opportunity: Teach helpful feedback.

Though unhelpful feedback may be valuable for the teacher, we can’t leave things there. Instructors need a range of preventative measures and interventions to reduce the frequency and intensity of bad feedback.

In this post and the next one, we are highlighting Eli’s features because Eli’s design allows writers, reviewers, and instructors to see, interact with, and export comments in a unique way.

Comments in Eli do more than give feedback to writers:

- Show All. All comments can be viewed from the writer’s perspective (feedback received) or from the reviewer’s perspective (feedback given). Having all comments a reviewer gave on a single screen gives students and instructors a way to see what a reviewer can say about other writers’ work. When students can talk about others’ work in ways that lead those other writers toward better revision, we know that they have developed new knowledge and skills that might help them revise their own. This at-a-glance list of comments given is a powerful learning record.

- Helpfulness rating. Comments can be rated for helpfulness by writers, as explained in “You’ve Totally Got This.” That action creates a reputation system that instructors and reviewers can see. Top reviewers and top rated comments are highlighted in the “Review Feedback” tab so that instructors can quickly model from the comments writers valued.

- Endorsement. Comments can be endorsed by instructors, which shows up as a thumbs up. Writers, reviewers, and instructors can see that endorsement within a review, but instructors can also see a count of total endorsed comments per review in the Roster’s student analytics. This count, in conjunction with helpfulness ratings, can help instructors reward helpful feedback.

- Add to revision plan. Comments can be added by writers to a revision plan, where they can add notes that reflect on the changes they’ll make and perhaps seek feedback from instructors. Revision plans hold students accountable for using feedback and encourage students to practice metadiscourse, which improves their decision-making process.

- Download. Comments can be downloaded in a report that includes helpfulness ratings, endorsement status, revision plan status, and word count. That download makes skimming all comments within a review easy. It also allows instructors to use spreadsheet functionality to design additional reports such as the ones we offer as a free Google template.

These tools help instructors see trends and models in the comments students exchange.
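A downloaded comment report can also be summarized with a short script instead of a spreadsheet. The sketch below assumes a CSV with columns named `reviewer`, `helpfulness`, `endorsed`, `in_revision_plan`, and `word_count`. Those column names, the sample rows, and the `summarize` function are all hypothetical, so check them against the actual download before adapting this.

```python
import csv
import io
from collections import defaultdict

# Hypothetical sample; real column names in the download may differ.
SAMPLE = """reviewer,helpfulness,endorsed,in_revision_plan,word_count
Ben,5,yes,yes,42
Ben,3,no,no,12
Cy,4,no,yes,30
Cy,,no,no,5
"""


def summarize(csv_text):
    """Summarize comments per reviewer from CSV text."""
    raw = defaultdict(
        lambda: {"comments": 0, "ratings": [], "endorsed": 0, "in_plan": 0}
    )
    for row in csv.DictReader(io.StringIO(csv_text)):
        stats = raw[row["reviewer"]]
        stats["comments"] += 1
        if row["helpfulness"]:  # writers may leave a comment unrated
            stats["ratings"].append(int(row["helpfulness"]))
        stats["endorsed"] += row["endorsed"] == "yes"
        stats["in_plan"] += row["in_revision_plan"] == "yes"

    summary = {}
    for reviewer, stats in raw.items():
        rated = stats["ratings"]
        summary[reviewer] = {
            "comments": stats["comments"],
            "mean_helpfulness": sum(rated) / len(rated) if rated else None,
            "endorsed": stats["endorsed"],
            "in_plan": stats["in_plan"],
        }
    return summary


for reviewer, stats in summarize(SAMPLE).items():
    print(reviewer, stats)
```

Counting how many of a reviewer’s comments end up in revision plans is one rough signal of which feedback writers actually used.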

Bottom line: Unless you teach helpful feedback, bad feedback is all you can expect.

Learning by doing involves mistakes. In peer learning, those mistakes include giving bad feedback. Teachers can learn from the mistakes students make in their metadiscourse. Learning from mistakes requires being able to see them and being able to see a better approach. Eli’s features give instructors tools for seeing comments, finding exemplars, and identifying weak examples too.

The payoff for teaching helpful feedback is huge. Instructors who invest the early weeks of the term in teaching it have a reasonable expectation that bad feedback will be a short-term rather than chronic condition in their classes. This expectation helps students have more confidence in peer learning: over time, comments will improve.

In part 2, we offer 3 sets of activities that let instructors clear the path from bad feedback to helpful feedback. Because it takes so much practice for students to get better, we offer a dozen ways for instructors to put the focus on what students say to each other in peer learning.