This post is a part of the series Challenges and Opportunities in Peer Learning.

This blog series addresses the challenges of peer learning as opportunities for designing more effective assignments. We’ve discussed the need for frequent revision, for thoughtful timing around expert feedback, and for distinguishing grading rubrics from learning scaffolds.

In part one of this post, we offered a MadLibs routine for developing the scaffold; here, in part two, we describe strategies for getting clear evidence that students are learning.

Part 2: Reducing the Noise

Our argument boils down to this claim: Effective peer learning assignments gather evidence about learning. Sure, they help students to learn from one another. But equally important is the way they provide formative feedback for instructors about what needs to be taught next and for students about what revisions they need to make. The goal of peer learning is to help students get on the right path before grading happens.

The game changer is multiple cycles of peer and instructor feedback. Single reviews assigned in the late stage of a writing process put immense pressure on students and on the activity to improve learning. That one review sweeps all evidence of learning into a giant pile, so students and instructors have to wade in deeply to figure out revision priorities.

We are advocating for a different model. Assigning several write-review-revise cycles for a writing project frees instructors up to sequence the skills along the learners’ journey. More feedback and revision lower the pressure for everyone.

To explain how more deliberate practice lowers the pressure, here’s a screenshot from our company’s Slack from August 18, 2016 that acknowledges how Bill Hart-Davidson’s patient mentoring has helped me fully embrace rapid feedback cycles:

This conversation followed the third day of class at the rural community college where I teach. I ran a quick, paper-based activity where students had to surround a quote from the day’s reading with their own words and use appropriate citation. That’s a follow-up activity to direct instruction and modeling on Day 2 plus textbook chapter reading homework. Day 2 in my course has had the same focus in Fall 2015, Spring 2016, and Fall 2016. But my expectation for what’s next has been radically transformed:

- In Fall 2015, I folded source use into a larger suite of goals. Day 2 was source use day; then I moved on, circling back in passing but never hard. At the end of the term, I did an analysis of why some students failed, and I found that the weakest papers could not connect quotes to their own claims. Day 2 on source use plus folding it in was clearly not enough.

- In Spring 2016, I vowed to get different results, and source use became the focus of Unit 1. I assigned the exact same peer review on source integration every week for four weeks straight. (The reading changed; the review stayed constant.) The results overall were much better. On the timed final exam mandated by the department, only a handful of students couldn’t surround a quote with their own thinking. A few more couldn’t cite accurately, but the attempt was visible.

- In Fall 2016, I’m still going to repeat the four weeks of guided peer feedback on correct source integration. I realized that I also needed an introductory activity that was not focused on students doing it well. I needed an activity where students tried to integrate a quote and then worked with a partner to label what parts they included and what parts were missing. The point is the labels, not the execution. I lowered the bar and felt triumphant because everyone left the room on Day 3 knowing the name of the thing they couldn’t do yet. Next time, I’ll worry about the explanation that follows a quote because that’s easier than the lead-in and less fussy than citation.

Because my course is built to include four weeks of deliberate practice during peer review plus a handful of in-class activities, everything is low stakes. It really isn’t a huge deal that only 10% of my class “got it” on Day 3 after the lecture, the modeling, and the textbook chapter reading. I didn’t fail as a teacher on Day 2, and they didn’t fail as students.

Practicing the Right Skill at the Right Time

Integrating sources is a hard skill, especially for students who struggle with reading. A well-integrated quote requires comprehension skills, rhetorical skills, plus grammar and mechanics. It takes time. Peer learning activities focused on the parts teach the habits of mind that lead students to fluency as they write with sources. Not every skill deserves so much deliberate practice, and not every group of students needs so many rounds. By gathering evidence throughout these practice rounds, I’m able to adjust course and provide individual interventions accordingly. Still, on Day 3, we are on the path to learning the intellectual move that separates weak end-of-term papers from strong ones; we know what to call the parts when we see them in drafts now; and I know what to emphasize during the next class.

This anecdote illustrates the claims we made in part 1 of this article when we explained why a rubric describes the destination but not the learner’s journey. To help learners on the journey, instructors have to identify learning indicators and then respond to the evidence of student learning.

Looking back at the MadLib patterns for learning indicators that we laid out in Part One:

| Patterns | Key |

|---|---|

|

|

I decided to use that pattern for the Day 3 activity:

- When I see that students can label the parts of a well-integrated quote, I know my students have learned what’s expected when they use sources.

- I see labeled parts best in a single paragraph focused on one quote.

- To see more labels of parts in a single paragraph focused on one quote, I need to ask students to choose a quote, write a paragraph, and get a peer to label what is included and missing.

My hat tip to Bill is not only about how focused this activity is but also about my increased ability to ignore what isn’t relevant now. As we acknowledged in part 1, student work along the journey is always a noisy signal of learning. The informal writing on Day 3 in my class is rife with what Frey and Fisher call mistakes (things writers can fix on their own in time) and errors (things that need my intervention). Because the learning indicator that mattered to me was students’ ability to correctly label all the parts of a well-integrated quote, I was able to

- isolate the signal: look only at whether all students labeled all parts;

- boost the signal: look at whether each part was included or missing;

- reduce the noise: not worry too much about whether the lead-ins, signals, citations, and explanations were any good.

Once I decided what I needed students to learn on Day 3 that they could reasonably be expected to learn, I was able to design an activity that let me see if they were learning.

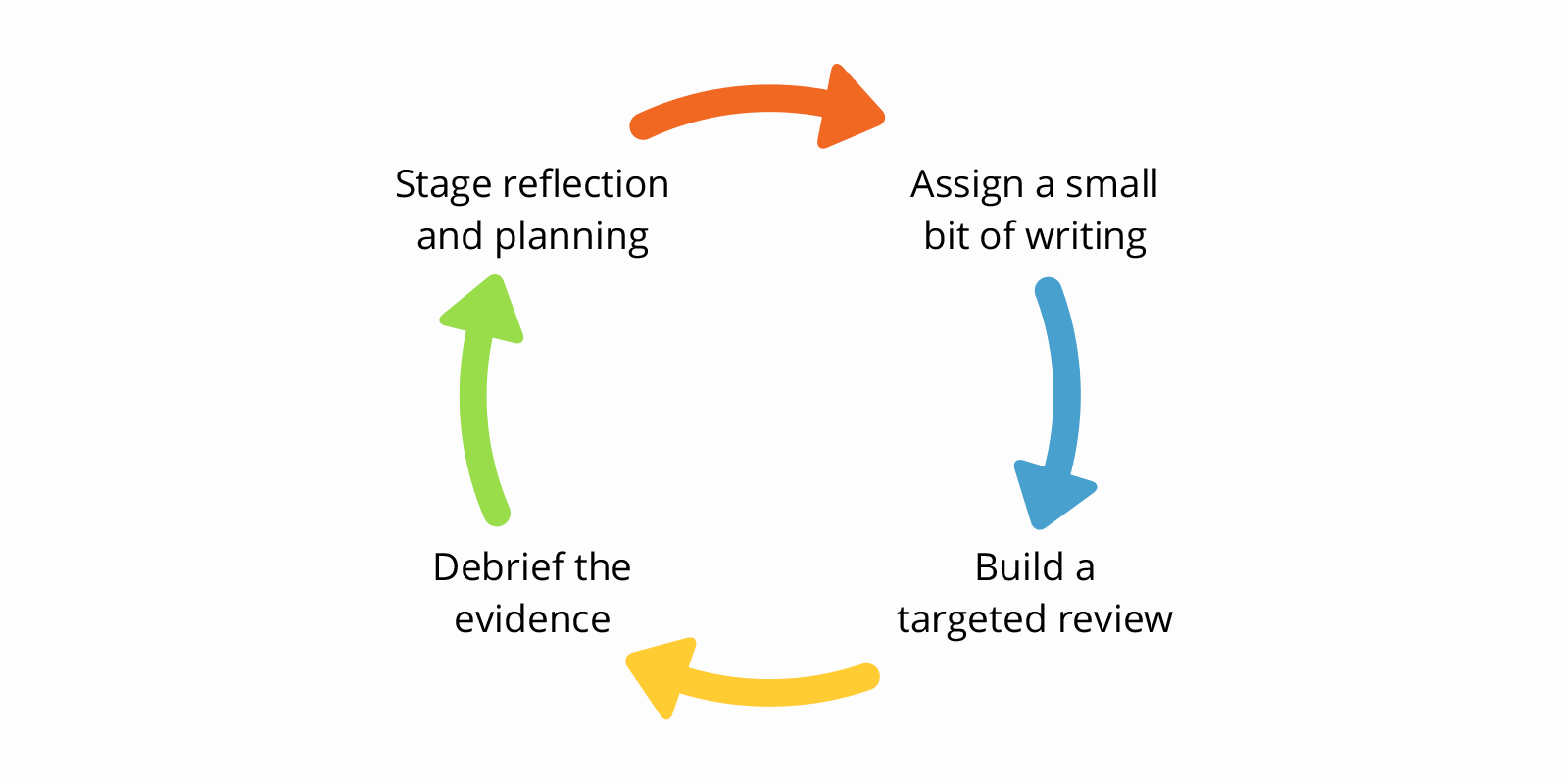

When instructors design activities to gather evidence that helps them see learning, they use four strategies:

Strategy 1: Assign a small bit of writing.

The first strategy of seeing learning is assigning the small bit of writing where it is easiest to see the learning indicator. It’s the least typing required to show the most learning.

Asking students to share a small bit of the “big” assignment solves three problems:

- Cognitive overload. Writing a few sentences takes little time, so students asked to revise that small bit are more likely to do so because they haven’t spent all night finishing it. Reviewers are also more likely to be able to read a small bit and give helpful feedback on it without smoke coming out of their ears.

- Noisy Signals. The small bit has less noise in it, so it is more focused on the skill instructors want students to practice and thus easier for reviewers to detect.

- Flimsy Scaffold. Picking the small bit students need to write helps instructors optimally sequence the process.

The type of small bit writing task depends on the big project and the course. Generally, there is a decision in the big writing project that affects all the other decisions. Start the small bit there.

For example, in writing arguments, the claim affects all other parts of the text. The claim is first (and last) in the writing process. The table below suggests just a few of the small bit writing assignments for arguments and their relevant learning indicators.

| Small Bit | Learning Indicator |
|---|---|
| Working Claim | Is it arguable? |
| Grounds | Is it reasonable? |
| Qualifier | What are the limits of the argument? |
| Counterargument | What are the opposing points of view? |
| Rebuttal | Is there evidence or a logical flaw that undercuts the opposition? |
| Revised claim | Is the argument clearly stated and qualified appropriately? |

This small bit strategy focused on the parts of argument helps writers build toward a big project one step at a time.

A similar approach can work for the parts of a genre too. If students are writing business letters, they can start with introductions that connect with readers and then proceed through the body and closing.

When designing small bits based on parts of a genre, make sure that students are getting supported in the intellectual moves required for those parts too. For instance, a literature review is a part of an academic research article, but students might need multiple small bit writing assignments in order to locate sources, summarize each, then synthesize them in order to situate their own project.

Small bits should be quick to compose, quick to review, and quick to revise. Writing process pedagogy and Writing Across the Curriculum/Writing in the Disciplines all emphasize small bits, and many resources offer excellent examples. Here are a few of our favorites:

- John Bean’s Engaging Ideas offers a robust collection of small bits for formal writing projects (chapter 6) and particularly for undergraduate research projects (chapter 13).

- “Designing Writing Assignments” from Writing@CSU’s Writing Studio Open Educational Resource Project describes principles of assignment design and provides examples from several disciplines.

- “Quantitative Writing” from Carleton College’s Quantitative Inquiry, Reasoning, and Knowledge Initiative has writing assignments based on ill-structured problems using data sets.

- Wendy Laura Belcher’s How to Write Your Journal Article in 12 Weeks includes lots of invention and exploration activities that help humanities and social science writers work through their ideas.

Strategy 2: Build a targeted review.

A review should help the criteria come into focus for students. The review guides students in reading the small bit according to criteria, rating how well it meets those expectations, and suggesting improvements. Developing a shared understanding of the criteria and the levels of performance takes time. The process begins with response prompts. An effective review tightly aligns response prompts with learning outcomes and asks for reviewers’ attention in the right way.

Consider a task that prompts reviewers to give feedback on writers’ ideas and their organization. Ideas and organization are highly related, but consider how different this feedback is:

- Your argument ignores an important point, and you should do more research to address this perspective.

- At the end, you’ve clearly stated your main point. Try taking that part and moving it to the top, then rework the transitions to flow from it.

A writer getting both comments at once will struggle to know how to follow good advice. The second comment suggests that the problem with the argument might be solved through rearrangement, but the first suggests that it needs to be revised to include additional perspectives. The feedback here is noisy. The writer has to figure out whether to rearrange or revise after more research or both.

That struggle is valuable, but it might not be productive. Deciding to do the easiest thing (rearrange) or the best thing (revise after research) requires a high cognitive investment—one not all students are willing to make. In other words, a noisy review makes it hard for writers to know what to do next.

Noisy reviews also make it harder for instructors to see learning. If the writer chose only to rearrange, did she unwisely miss the opportunity to revise or was that choice a satisfactory solution? There’s no easy way to know because the review invited too many kinds of next steps.

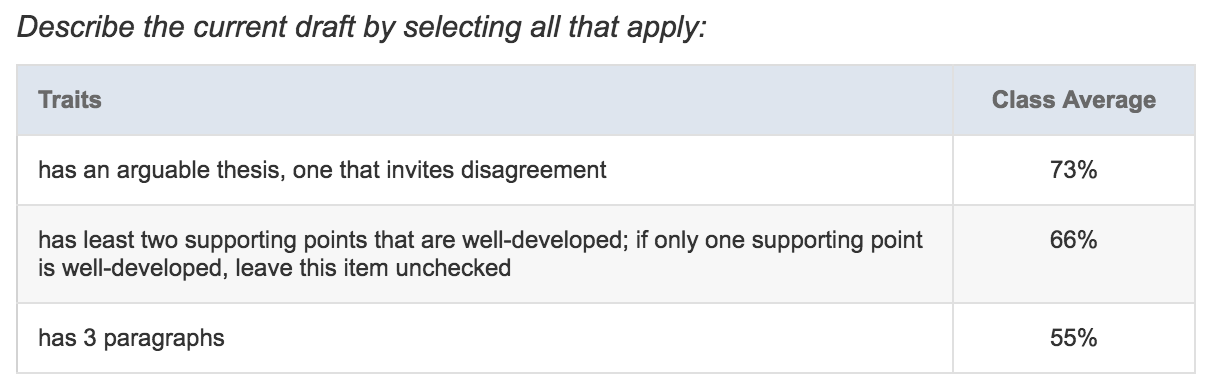

Unlike the noisy review described above, targeted reviews do three things well simultaneously:

- Guide novice reviewers in reading the small bit of writing according to criteria

- Generate data instructors and students can use to see learning

- Generate feedback students can use to make a revision plan

In Eli, review design is survey design. Instructors get various ways to phrase questions so reviewers get guidance, and everyone gets results. Trait identification checklists, for instance, let instructors specify what features drafts should have, so reviewers make simple “yes/no” decisions, which show up as individual and class trends.
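To make “individual and class trends” concrete, here is a minimal Python sketch of how yes/no checklist answers could be tallied. It is not how Eli works under the hood; the data layout, the trait names (borrowed from the Day 3 activity), and the function names are all hypothetical, chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical review data: (writer, reviewer, {trait: reviewer's yes/no answer}).
# The layout and names are assumptions for illustration, not Eli's data format.
reviews = [
    ("ana", "ben", {"lead-in": True, "quote": True, "citation": False, "explanation": False}),
    ("ana", "cam", {"lead-in": True, "quote": True, "citation": True, "explanation": False}),
    ("ben", "ana", {"lead-in": False, "quote": True, "citation": False, "explanation": True}),
    ("ben", "cam", {"lead-in": False, "quote": True, "citation": False, "explanation": False}),
]


def trait_rates(rows):
    """Return the share of 'yes' answers per trait across the given reviews."""
    yes = defaultdict(int)
    total = defaultdict(int)
    for _writer, _reviewer, checklist in rows:
        for trait, present in checklist.items():
            total[trait] += 1
            yes[trait] += int(present)
    return {trait: yes[trait] / total[trait] for trait in total}


def writer_rates(rows, writer):
    """Individual trend: the same tally, limited to reviews of one writer's draft."""
    return trait_rates([row for row in rows if row[0] == writer])


class_trend = trait_rates(reviews)        # which parts the class as a whole is missing
ana_trend = writer_rates(reviews, "ana")  # which parts one writer is missing
```

In this made-up class, every review found a quote, but only a quarter found a citation or an explanation. That is the kind of trend that tells an instructor what to emphasize next.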

Designing a good review then is not only about finding the criteria that will best reveal learning indicators but also about asking leading questions so that reviewers give relevant feedback that lets instructors and students know if learning is happening. The task itself can reduce the noise in the activity.

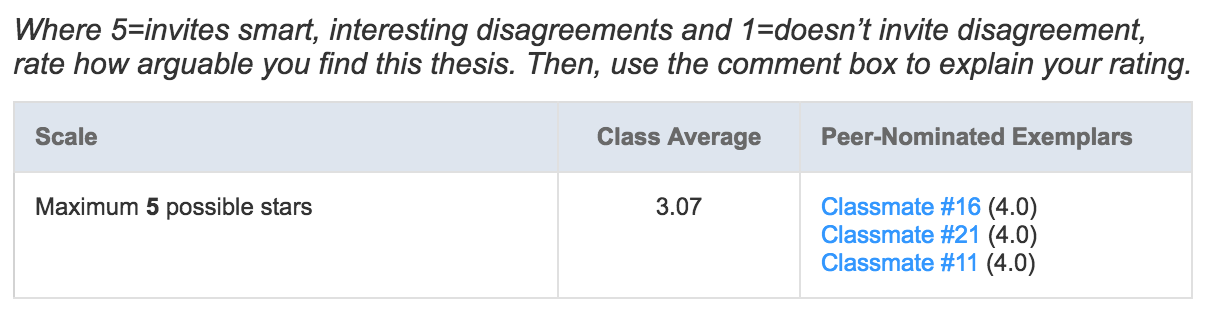

Another way to reduce the noise in reviewers’ feedback is to ask about the same thing in two different ways. To follow up on a “yes/no” checklist, instructors can add a rating response prompt and invite reviewers to explain their reactions. Together, the checklist item, rating, and comment triangulate whether the draft actually has an arguable thesis or not.

Asking the same question twice helps reviewers be careful in how they give feedback. It also lets instructors know if reviewers are applying the criteria well. If reviewers check off all the traits on the list and rate the draft highly but their explanations are fishy, then instructors know that the reviewer is struggling to give accurate, helpful feedback. By considering how reviewers’ quantitative and qualitative feedback aligns, instructors can gauge whether reviewers are applying criteria well.
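As a rough illustration of that alignment check, the Python sketch below flags reviews where every trait is checked and the rating is high but the comment is thin. Everything in it is an assumption made for the example: the field names, the four-star cutoff, and especially the word count and keyword match, which are crude stand-ins for an instructor’s judgment about whether an explanation is fishy.

```python
# Hypothetical review records; field names and thresholds are illustrative assumptions.
reviews = [
    {"reviewer": "ben", "checked": 4, "traits": 4, "stars": 5, "comment": "Good job!"},
    {"reviewer": "cam", "checked": 4, "traits": 4, "stars": 5,
     "comment": "Your lead-in names the author, and the explanation ties the quote to your claim."},
    {"reviewer": "dee", "checked": 1, "traits": 4, "stars": 2, "comment": "Nice."},
]

# Words from the criteria that a grounded comment would be expected to mention.
CRITERIA_TERMS = ("lead-in", "quote", "citation", "explanation")


def needs_a_second_look(review, min_words=8):
    """Flag reviews whose glowing numbers are not backed by a criteria-based comment."""
    all_checked = review["checked"] == review["traits"]
    rated_high = review["stars"] >= 4
    comment = review["comment"].lower()
    mentions_criteria = any(term in comment for term in CRITERIA_TERMS)
    thin_comment = len(comment.split()) < min_words or not mentions_criteria
    return all_checked and rated_high and thin_comment


flagged = [r["reviewer"] for r in reviews if needs_a_second_look(r)]
```

A flag here is only a prompt to go read that reviewer’s comments, not a verdict on the reviewer.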

Instructors can also help reviewers keep the criteria in focus by including tips and reminders in the response prompts, especially for comment prompts. Giving feedback is cognitively demanding. Reduce that burden by putting all the information that students need in the task itself.

This comment prompt writes out all the steps of describe-evaluate-suggest, a pattern for helpful comments. Being explicit in the prompt means students don’t have to remember or go look it up. That helps reviewers stay on-task.

How could the writer improve? Highlight a passage, then add a comment. In each comment, do these 3 things:

- describe what the writer is saying,

- evaluate it according to the criteria above, and

- suggest a question or a strategy the writer should consider next.

Make AT LEAST 2 comments per draft.

In the review task design, instructors try to make sure writers get feedback they can use to revise. Through the questions they ask, instructors direct reviewers’ attention and influence how reviewers comment to be sure that writers get feedback that is relevant, timely, and helpful. When the task helps reviewers give better feedback, instructors can expect more out of writers’ revisions.

Strategy 3: Debrief the Evidence.

A targeted review of a small bit of writing will produce a great deal of data. The data can be an especially strong signal of learning if instructors have been careful to reduce the noise in writers’ drafts and in reviewers’ feedback with careful task design. The opportunity here is to become an evidence-based instructor who is adjusting lesson plans in response to real-time feedback about student learning.

Our team at Eli puts emphasis on debriefing because, as Dylan Wiliam explains in his ASCD article “The Secret of Effective Feedback”: “The only important thing about feedback is what students do with it.” In debriefing, our goal is to say something about the trends in the peer learning activity that motivates students to do something with their feedback. The table below shows how the different response types used in a review task lead to an instructor-led discussion of how students can use the data to plan revision.

| Analytics | Teacher Priorities for Debriefing | Student Priorities for Revision |
|---|---|---|
| Checklists | | |
| Star Ratings | | |
| Likert Ratings | | |
| Comments (Contextual and Final) | | |

In debriefing, instructors say aloud what the trends tell them about learning. They debrief to help students look around at others’ work and realize what they need to change. In the next blog post, we’ll delve more deeply into the strategies for debriefing with writers on doing better revision and with reviewers on offering better feedback.

Strategy 4: Stage revision planning and reflection.

Reducing the noise with a small bit of writing, a targeted review, and an intentional debriefing of the evidence sets students up to revise effectively. Instructors can increase that chance by asking writers to make a revision plan.

In Eli, a revision plan is a separate task. It gives students something to do with their feedback in a review task before they try to execute those goals in their revised drafts. In the feedback report they receive from peers, writers can “add to revision plan” any helpful comment. A copy of that comment is moved from the review task to the revision plan task. In the revision plan, writers can prioritize comments and add notes explaining their goals. (Our student resources explain the approach and the steps.)

Revision plans provide a record of how students are using the feedback they’ve received. They provide a new class of learning indicators:

- Can students recognize helpful feedback?

- Can they put it in priority order?

- Can they explain how they’ll follow the advice?

Students’ prioritized list of comments and reflections on how those comments will influence revision give instructors a sense of whether writers are making decisions that will lead them down the right path.

Technically speaking, writers can only add comments received from peers. That’s only a slice of the feedback writers have encountered in the peer learning activity, however. In the revision plan note space, writers can add what they’ve learned from

- reading peers’ drafts

- hearing models discussed in class

- giving feedback to peers

- hearing the most helpful comments discussed in class

- hearing the instructor debrief checklists and ratings in class

- participating in other class activities

Revision plans reveal the thinking students are doing between drafts. Each one is a snapshot of metadiscourse, the thinking-about-thinking writers are doing. Students’ metadiscourse should be informed by everything that’s happening in a feedback-rich classroom. A revision plan is a small bit of typing that lets instructors know how aware students are of all the feedback available to them, not just the peer feedback they received personally.

Instructors can use revision plans to find evidence that students are tuned into feedback and listening to coaching. They can be confident writers are learning when students use language from the review task in their reflection, mention a strategy covered during debriefing, and prioritize helpful feedback from peers.

In Eli, the revision plan is also where instructors can intervene as needed to provide expert feedback. The goal is to coach the thinking students are doing between drafts. There are four basic moves instructors can take in revision plans:

- Go forth. You prioritized well, so keep going.

- Apply the advice you gave to your peers in your own draft.

- Rethink following the peer feedback that recommended _____.

- None of your peers let you know that you also need to _____.

As we discussed in a previous post, instructor feedback is a short lever. It’s time-intensive and thus high-cost. By keeping the draft, review, and revision plan focused on a single learning indicator and by limiting feedback to the four moves above, instructors can keep their workload manageable. More importantly, they can be confident that their expert feedback is timely and relevant to the skill that leads to student learning.

Bottom line: Instructors bite off more than students can chew.

If these four strategies for reducing the noise in a peer learning activity around a single learning indicator seem overwhelming, guess how overwhelming typical peer learning activities are?

There’s rich evidence of learning in drafts, comments, and revision plans. But those are all noisy signals, despite instructors’ best intentions as designers.

A really good outcome of formative feedback is realizing that the tasks were too ambitious: too much drafting, too many criteria, too little attention to overall trends, too vague metadiscourse. What you can see, you can teach.

Getting in tune with the evidence of learning in drafts, comments, and revision plans helps instructors spend more time where students need help. When instructors plan for multiple write-review-revise cycles, there’s a next round of learning.

Read Part 1 of Your Rubric Asks Too Much, Are learners on the right path?

References

- Bean, John C. 2011. Engaging Ideas: The Professor’s Guide to Integrating Writing, Critical Thinking, and Active Learning in the Classroom. 2nd ed. The Jossey-Bass Higher and Adult Education Series. San Francisco: Jossey-Bass.