In the past few months, we (Bill Hart-Davidson and I, Melissa Graham Meeks) have been comparing notes as teachers using Eli Review with our very different student populations. Bill is teaching a senior capstone course at a selective research institution, and I am teaching a first-year writing course at an open-admissions community college. Though we can point to many ways in which students’ prior experiences, habits, attitudes, and life circumstances affect their performance in our classes, we share a preoccupation with how to see their learning. We also have some common strategies that we think could help many teachers!

Where do we see learning?

You might think that we see learning in different places because of who our students are. It’s true that, because Bill’s students are more experienced writers, he’s more likely than I am to see evidence of learning in drafts. With novice writers, even late drafts are noisy signals: they contain many interesting signs of progress but also many mistakes made along the way. Mina Shaughnessy’s landmark work Errors & Expectations is based on the idea that we can learn much from seeing where students struggle to meet the expectations of new audiences and new genres. Sentence-level control slips more as writers attempt harder intellectual and rhetorical moves (NCTE Beliefs about Teaching Writing: “Conventions of finished and edited texts”).

But what we have in common is a problem: if we wait for a draft to see evidence of learning, we’re often too late to influence that learning. Drafts show us where learners are, but they don’t tell us whether writers are headed in the right direction. It’s the difference between Google Maps showing you a dot and showing you an arrow. While the draft shows us current progress, a writer’s feedback to other writers and their reflections on revision goals tell us whether they are pointed the right way.

What are learning indicators?

We’ve obsessed over seeing students’ progress and orientation (their learning) so much that our conversations now follow a Mad Libs routine:

- When I see X, I know my students have learned Y.

- I see X best in Z.

- To see more X in Z, I need to ask students to do A, B, and C.

In this routine:

- X = learning indicator

- Y = learning outcome

- Z = small bit of writing

- A, B, and C = criteria or steps

Defining X has been our secret sauce. X is a learning indicator. A learning indicator is the first sign that a student is practicing the skill that will lead to the desired performance outcome. It’s not everything we want to see, but it’s the most important thing to see at a particular moment in the process. If we can find X, we can see learning.

How do we design around learning indicators?

This routine of hunting for the key learning indicator has streamlined our work. As teachers, we assign about 15 to 20 reviews per course per term. We’ve also built ten sandbox courses for instructors in aviation, business, engineering, landscape architecture, first-year writing programs, and K-12 high-stakes testing. In all these contexts, we ask one question relentlessly:

What learning indicator matters now?

By focusing on which evidence would tell us that students are making progress and are headed in the right direction, we are doing backward design a little differently:

- From the learning outcomes, we identify the learning indicators.

- From the learning indicators, we design reviews around a few, very specific criteria.

- From the reviews, we figure out the smallest bit of writing that shows the learning indicators.

- From the small bits of writing, we assemble a project.

Now, for writing teachers especially, designing the project last seems odd because we’re accustomed to thinking of the project itself as the central animating force. Audience, purpose, genre, and process clearly matter from the very start of backward design, and it’s helpful to divide a big project into stages like proposal, outline, source list, body paragraph, introduction, and so on. Thinking about the small bit, however, doesn’t necessarily bring the learning indicator into focus.

What we’ve found is that designing around learning indicators (finding X) improves our design. If we start from the project, we put rapid feedback cycles in place for all the small bits, and we end up without enough practice of the key skills that improve learning outcomes. When we start from learning indicators, we think more about how much practice is needed, and we design for improvement in writers’ thinking, not simply in their drafts.

How do learning indicators become review tasks?

Designing review tasks around learning indicators is also a backward design process. Once we know which learning indicators match the learning goals, we can identify relevant criteria. We then build the review task to help reviewers read for and evaluate according to those criteria using the different response types in Eli Review:

- Trait identification questions help reviewers read for criteria.

- Ratings help reviewers evaluate according to criteria.

- Comments guide reviewers in explaining their evaluations and suggestions related to the criteria.

The three examples below underscore how an effective review scaffolds the way reviewers read drafts. Notice how the reviews echo our Mad Libs conversation with each other, except that this conversation is directed at students:

- Do you see X? If so, the writer has accomplished Y.

- How well is Y done?

- To improve Y, tell writers how to achieve X through A, B, and C.

In this way, a review makes students better readers of learning indicators too. By making visible what counts as learning, we help students practice effectively. They practice when giving feedback and when using feedback to revise.

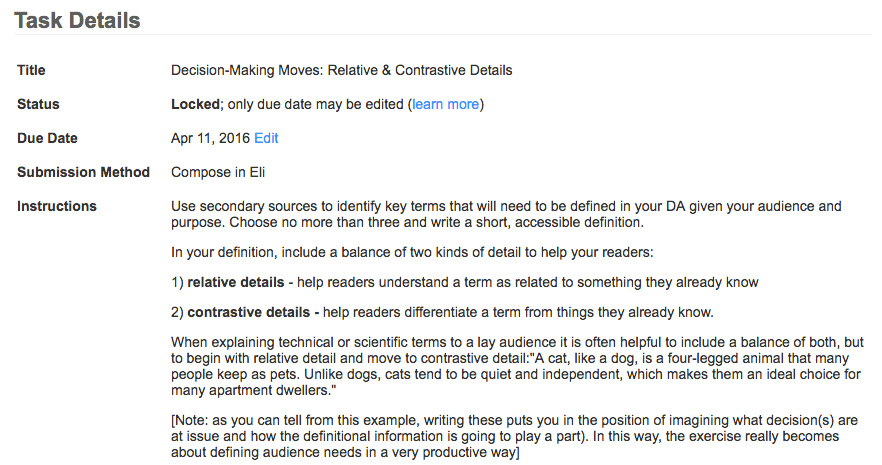

Learning indicator X is relative and contrastive detail in definitions of technical terms.

In Bill’s Special Topics Medical Writing Class, students are preparing health care decision aids. Readers of those decision aids are unfamiliar with key terms, so writers must offer definitions. When explaining technical or scientific terms to a lay audience, writers should include a balance of both relative (similar to) and contrastive (different from) details. In fact, effective definition writers begin with relative detail and move to contrastive detail: “A cat, like a dog, is a four-legged animal that many people keep as pets. Unlike dogs, cats tend to be quiet and independent, which makes them an ideal choice for many apartment dwellers.”

In this example, definition is the learning outcome, and the learning indicators are relative and contrastive detail. By focusing writers’ and reviewers’ attention on types of details and the balance of details in definitions, Bill scaffolds students’ learning in a complex project.

Having students work through definitions in this way provides the basis for a more sophisticated discussion of audience. There is no “perfect amount” of contrastive or relative detail for all definitions. Writers must decide how much of each is most appropriate for the audience they are working with.

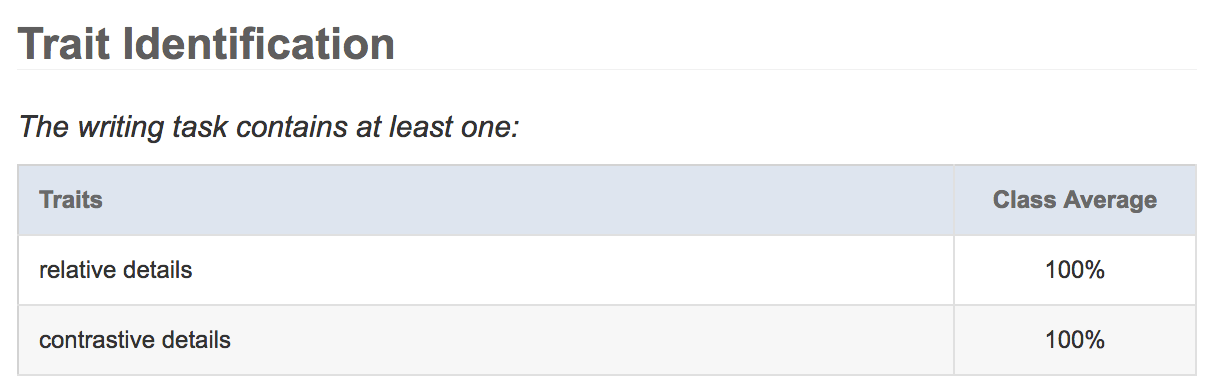

Learning indicator X is topic sentences, which contribute to the paper’s organization.

In Melissa’s first-year composition course, students have built a first draft by combining paragraphs from earlier tasks. All the drafts need work on organization. For readers, topic sentences help make the flow of ideas clear. Topic sentences that point back to the thesis or previous paragraph and point forward to the main ideas of the current paragraph are learning indicators for organization. This review, one of four reviews students completed to give feedback on a full first draft, helped writers see whether readers could discern their organization from the topic sentences alone.

Seeing how reviewers perceive the organization from the topic sentences alone helps writers know where to start revising. As writers adjust the topic sentences to make the progression of ideas clear, they’ll discover other changes they need to make to supporting details and transitions.

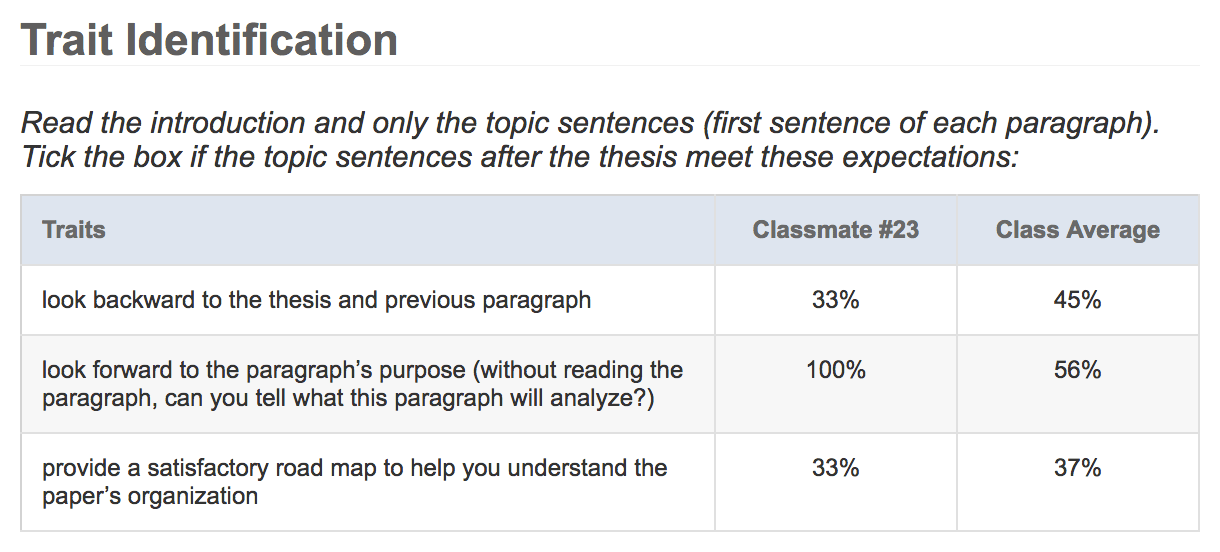

Learning indicator X is well-supported claims of fact and claims of value, which readers recognize as depth of reflection.

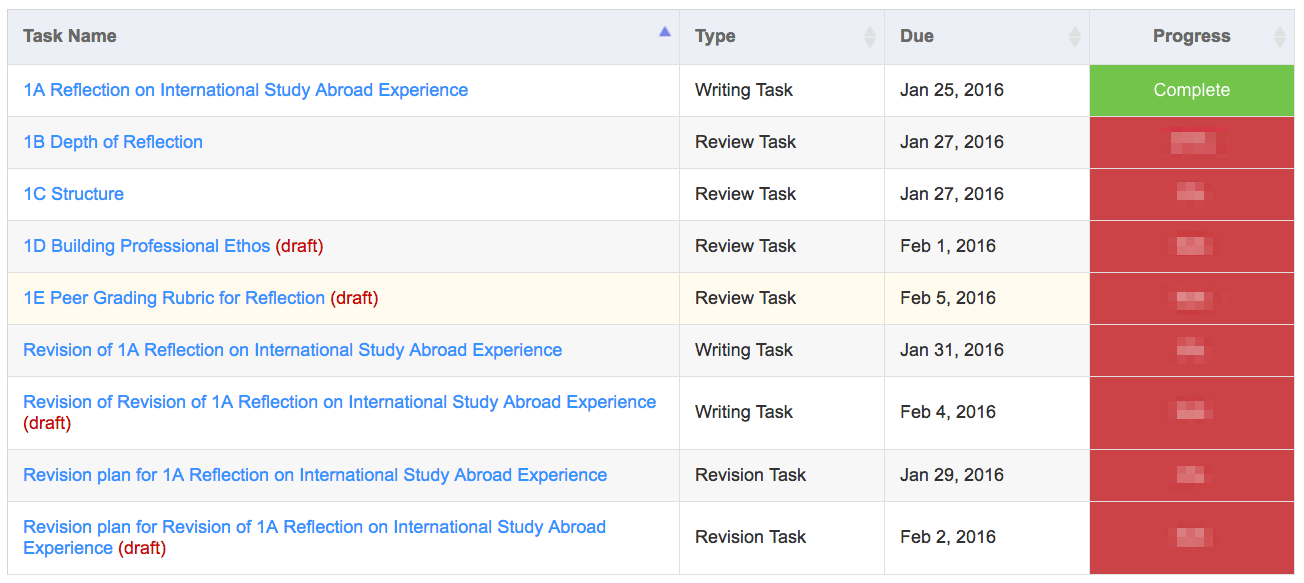

Third, in a sandbox built for a capstone business writing course, we developed a series of review tasks designed to improve students’ reflections on their study abroad experience.

The first task in the series focuses on what the rubric calls “depth of reflection.” Bill pointed out that depth comes from claims of fact and claims of value that are well supported by evidence. Thus, the task we designed asks reviewers to look for certain types of claims of fact and of value and then to rate whether there’s sufficient evidence for each. The task itself points out ways writers can expand their claims when revising their reflections.

Review Instructions

The purpose of this review is to help the writer improve the depth of this reflection on the international study abroad experience by evaluating the claims of fact and claims of value in the personal narrative.

- Claims of fact establish that something happened or exists in a certain way.

- Claims of value articulate why something matters.

The personal narrative reflection asks writers to make a claim of fact describing an experience and then to make a claim about its value for learning.

Your task as a reviewer is to tell writers what claims they are making and how to make them more compelling.

Trait Identification Set #1 – Prompt and Traits

Read the draft and look for claims of fact.

Claims of fact establish that something happened, existed, or is true. In a reflection paper, claims of fact describe experiences so that readers understand what happened.

Writers are NOT required to use all of these. Tick the box if you see at least one example from this list of options:

- Claims about places or events on the itinerary

- Claims about places or events not on the itinerary

- Claims about people or relationships

Trait Identification Set #2 – Prompt and Traits

Read the draft and look for claims of value.

Claims of value assert that something is good or bad, more or less desirable, or belongs in one category instead of another. In a reflection paper, claims of value indicate why the experience mattered and what was learned from it.

Tick the box if the draft includes these claims of value:

- Claims about the skills, strategies, and knowledge gained

- Claims about the communication and/or relationship-building process

- Claims about how experiences reflect or challenge theories, concepts, or strategies

- Claims about how experiences translate into future career opportunities and leadership skills

Embedding these options in the review gives reviewers a strong scaffold for reading according to the criteria and helps writers decide their next steps based on feedback.

In each of the three examples above, the review focuses on a single learning outcome: definitions, organization, and depth of reflection, respectively. The review prompts use checklists, ratings, and comments to direct reviewers to look for specific learning indicators of those outcomes. Such review activities increase time-on-task for a narrow but high-impact skill, making sure that reviewers’ feedback and our debriefing with students about that feedback are goal-oriented and productive.

What do we get by focusing on learning indicators?

A narrow focus on learning indicators means that everyone, students and instructors alike, can see whether the most important learning is happening. By asking, “What learning indicator matters now?” we start designing for

- the thing we need to see to improve learning,

- the thing reviewers need to see to give better feedback, and

- the thing writers need to see to make better revisions.

Thinking first about learning indicators and how they should be sequenced helps us scaffold students’ work. We end up with reviews that follow the considerations outlined in our professional development module on “Designing Effective Reviews”:

- Consider the cognitive load.

- Start with learning goals and design backward.

- Prompt reviewers with specific, detailed questions.

We end up achieving those larger goals by focusing on a narrower one. Building projects up from learning indicators rather than drilling down to them has changed the way we talk about our work with each other and the way we frame reviews for our students. Because learning indicators are at the forefront of our daily class activities, we are more confident that our feedback-rich classrooms are helping students practice key skills. We’re more confident because we, and more importantly our students, can see learning.