Melissa Meeks is the Director of Professional Development for Drawbridge, Eli Review’s parent company. In this post, she reflects on how she recently stepped back into teaching and what she’s learned about tracking engagement by taking an evidence-based approach.

Primed to be an Evidence-Based Teacher of Writing

I remember where I was the first time I learned about Eli Review. In December 2011, I was a media consultant for Bedford/St. Martin’s, and I was in the Boston office for year-end reviews. Nick Carbone pulled me into an office and gave me the demo. As he wrapped up, I told Nick: “That would have made me a better teacher.” One look at the single-screen view of every comment given and received by each student convinced me that Eli offered efficiency and visibility I couldn’t get with any other tool.

Fast forward four years. I work for Drawbridge because I’m convinced that rapid feedback cycles where writers share small bits of writing, where reviewers respond in targeted ways, and where writers build careful revision plans provide a better scaffold for teaching writing. I also know from hundreds of conversations with instructors how powerful it is to see students’ thinking as reviewers give feedback and as writers use feedback to plan revision. So, it’s easy to see why, in my return to the classroom after a few years in textbook publishing, I was really primed to think of myself as an evidence-based teacher.

Looking at Engaged Writing

Yet, despite my training and pedagogical beliefs, I struggled every single day of my first semester back in the classroom in Fall 2015. I’m still struggling in Spring 2016 too! I’m struggling because the evidence Eli Review showed me was that my chief problem was student disengagement, specifically:

- missing work

- work completed too late to benefit from peer learning.

These disengagement problems occupied my time as a teacher. I sent class-wide reminders. I reached out to students individually. As required by the department, I filled out early alert forms and partnered with advisors to get students on track. I kept the class course site updated. I pulled them aside on the days they actually came to class. I conferenced. I talked to them on the phone.

Basically, I chased “delinquent writers.” That’s a term we use at Eli to talk about writers whose drafts aren’t submitted and thus can’t be reviewed. Delinquent writers affect group members’ progress. A reviewer can’t provide feedback on a missing draft. In my job at Eli, I help instructors understand that, as review coordinators, they have to put delinquent writers in a place where they aren’t holding others back. I probably say that twice a week.

I didn’t realize until my first semester was almost over the extent to which I let delinquent writers hold me and prepared writers back. In my class data, I was too aware of who didn’t turn in their work or complete their reviews. I spent too much time trying to get delinquent writers to become simply writers. I have a responsibility to seek out the absentees and the delinquent writers, drawing them toward academic writing as best I can. I’m ethically accountable for the ways I help students move through the gate of a required writing class. Still, delinquent writers shouldn’t be the spotlight of the course.

Looking for Impact on Writers

My greatest responsibility is not to delinquent writers but to prepared writers. Because my attention was riveted on those who didn’t attend or submit work, I directed our energy to improving the submitted drafts rather than increasing the effectiveness of peer learning. In class, I modeled drafts—a lot. In revision plans, I commented heavily. I tried to make sure writers had what they needed to revise. Managing draft improvement seemed like a reasonable approach to addressing the needs of both prepared and delinquent writers.

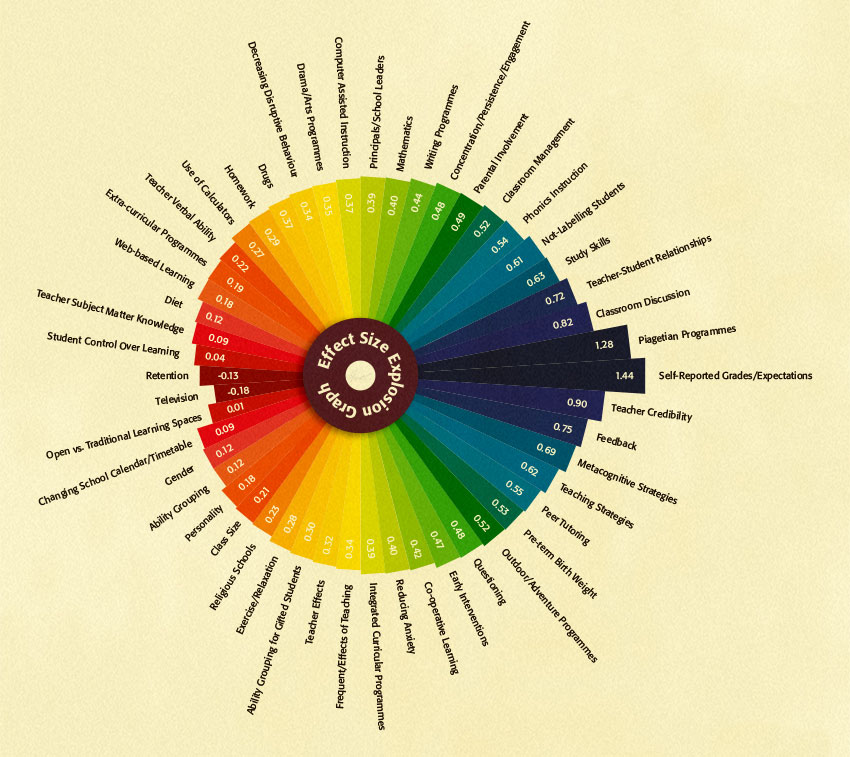

But I was wrong. Managing draft improvement didn’t have a measurable impact on students’ learning. Over the break, I read John Hattie’s Visible Learning for Teachers: Maximizing Impact on Learning, which updates and makes more practical his earlier book with Gregory Yates, Visible Learning and the Science of How We Learn. Hattie asks teachers to “know thy impact” and challenges them to make high-impact interventions. According to Hattie, that means interventions with an effect size of d > 0.40. (Get the scoop from Visible Learning.)

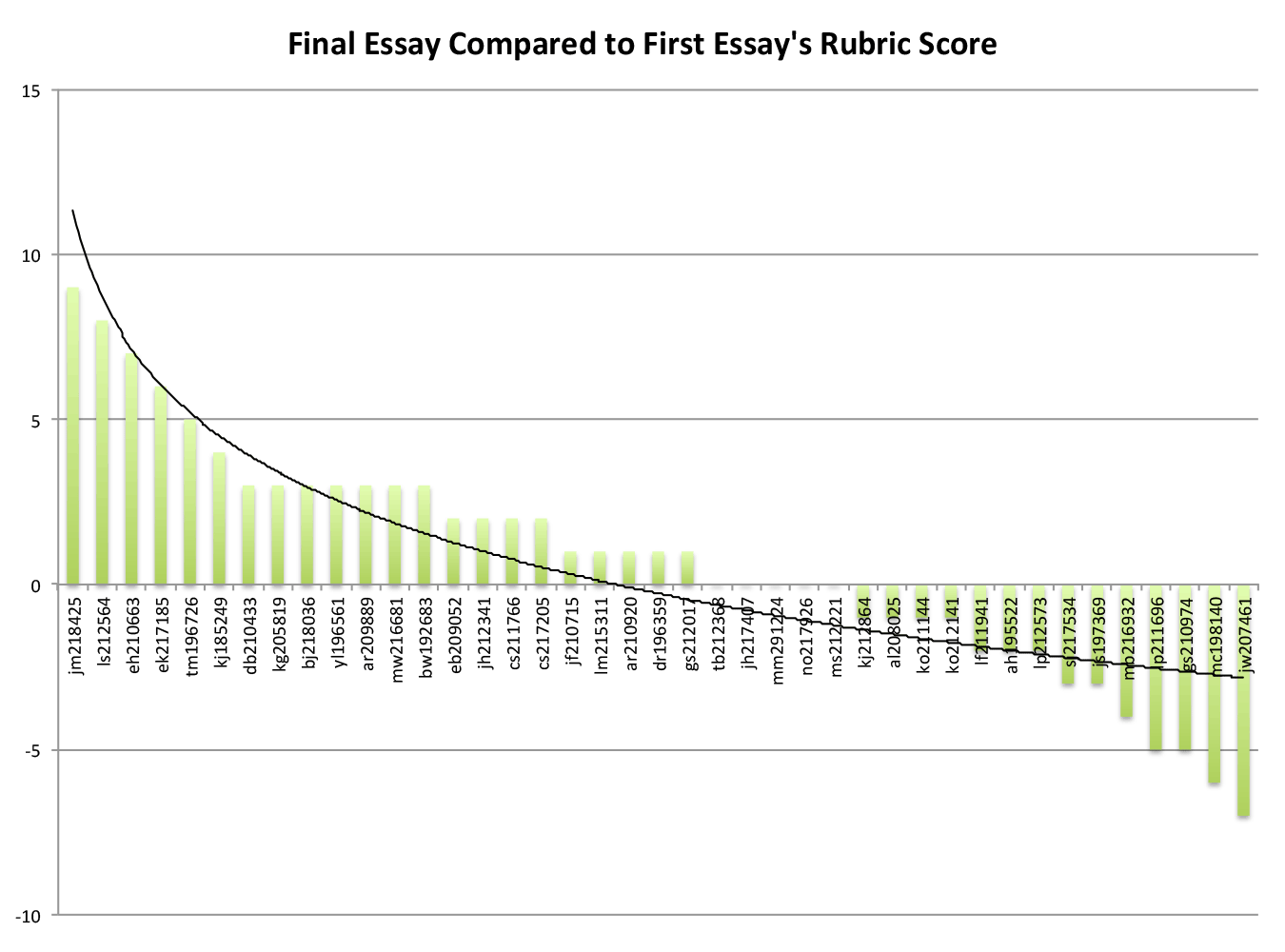

My class was full of high-impact interventions, especially formative evaluation (0.90) and feedback (0.75). So, I tried to follow Hattie’s advice and “know my impact.” I looked at the change in students’ overall rubric scores across four assignments. Except for students who failed to meet the minimum requirements on the first paper, students’ first-paper grades mirrored their final-paper grades; where the grade changed at all, it went down. That’s not good.
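For readers who want to run a similar check on their own gradebook, here is a minimal sketch of an effect size calculation. It uses the common Cohen's d formula with a pooled standard deviation, which is an assumption on my part and may not match how Hattie computes his figures. The function name and the scores are made up for illustration; they are not my class data.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(before, after):
    """Difference in means divided by the pooled standard deviation.

    Assumes each list has at least two scores and the scores are not all identical.
    """
    n1, n2 = len(before), len(after)
    s1, s2 = stdev(before), stdev(after)
    pooled = sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    return (mean(after) - mean(before)) / pooled


# Hypothetical rubric scores (out of 100) for five students.
first_paper = [70, 75, 80, 85, 90]
final_paper = [72, 77, 82, 87, 92]

d = cohens_d(first_paper, final_paper)
print(f"d = {d:.2f}; above Hattie's 0.40 threshold: {d > 0.40}")
```

Because the same students wrote both papers, a paired analysis might be more appropriate; this version is only meant to show the basic idea of comparing a change in means to the spread of the scores.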

Looking for Impact on Reviewers

After finding that I didn’t convert many C writers to B writers or B writers to A writers, I wasn’t ready to give up the quest for knowing my (lack of) impact. Better reviewers, better writers, right? So, I checked to see if there were any trends between students’ engagement in Eli Review and their rubric scores.

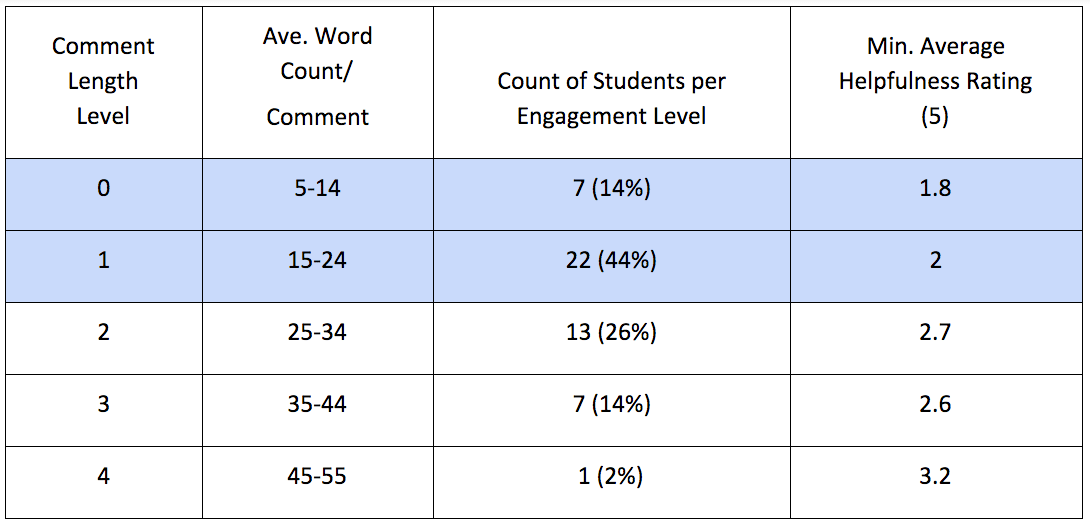

To do that analysis, I had to figure out what counted as being a “better reviewer” in Eli Review: review completion, total number of comments, total word count of all comments, average word count of comments, helpfulness ratings, or endorsements. Using Eli’s comment digest, I analyzed a semester’s worth of comments, and I tried everything. For my class, average word count per comment helped me best distinguish between reviewers who engaged fully and minimally, and the figure below shows the five levels I identified.

This table shows that reviewers whose comments were about 25 words long (a sentence) were judged by the writers who received them as 3+ out of 5 possible stars; writers did not usually find comments with fewer than 25 words to be even 3 stars helpful. That is, when reviewers chose not to or didn’t know how to explain their insights about the writer’s draft in at least a sentence, writers did not get helpful feedback. Since 60% of the reviewers wrote shorter comments, most writers did not get helpful feedback.

Is 25 words a magical number for comment length? No, though I did find a similar pattern in another instructor’s class. The magic of helpful comments is enough detail to understand reviewers’ suggestions, and word length is a good indicator.
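If you want to try the same measure on your own class, the sketch below computes average words per comment for each reviewer and flags who reaches a threshold. It assumes the comments have already been pulled out of a digest into (reviewer, comment text) pairs; that layout, the function names, and the sample comments are hypothetical, and the 25-word cutoff is just the number that worked for my class.

```python
from collections import defaultdict


def average_comment_length(comments):
    """Map each reviewer to the mean word count of their comments."""
    word_counts = defaultdict(list)
    for reviewer, text in comments:
        # Count whitespace-separated words in each comment.
        word_counts[reviewer].append(len(text.split()))
    return {r: sum(counts) / len(counts) for r, counts in word_counts.items()}


def meets_threshold(averages, min_words=25):
    """Flag reviewers whose average comment is at least min_words long."""
    return {r: avg >= min_words for r, avg in averages.items()}


# Hypothetical comments, not real student data.
comments = [
    ("Reviewer A", "Good job."),
    ("Reviewer A", "Nice intro, I liked it."),
    ("Reviewer B", "Your second paragraph introduces the quote well, but the "
                   "explanation afterward only restates it. Try adding one "
                   "sentence that connects the quote back to your claim about "
                   "the narrator."),
]

averages = average_comment_length(comments)
for reviewer, engaged in meets_threshold(averages).items():
    print(reviewer, round(averages[reviewer], 1), "words on average;",
          "meets threshold" if engaged else "below threshold")
```

Word count is only a proxy for detail, so it is worth reading a sample of comments on either side of the cutoff before trusting the flag.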

That comment length mattered surprised me. Because delinquent writers got so much of my attention, I didn’t pick up on reviewers’ disengagement with commenting. The number of comments they offered often met my minimum expectations, but it was the length of their comments that did not meet the minimum of writers’ needs.

Looking Back on Pedagogy

It’s not that I ignored commenting. I taught “describe-evaluate-suggest,” which is a pattern of commenting Bill Hart-Davidson explains in Eli’s student materials on giving helpful feedback. I modeled helpful comments frequently from peer exemplars; I endorsed; I announced peer norms for comment counts; I rewarded effort with points. At the end of the term, I even assigned a scripted reflective essay in which students argued that they improved as reviewers by selecting examples from their comment digest in Eli. Their reflections were my first inkling that something was amiss among prepared writers who were also engaged but unhelpful reviewers.

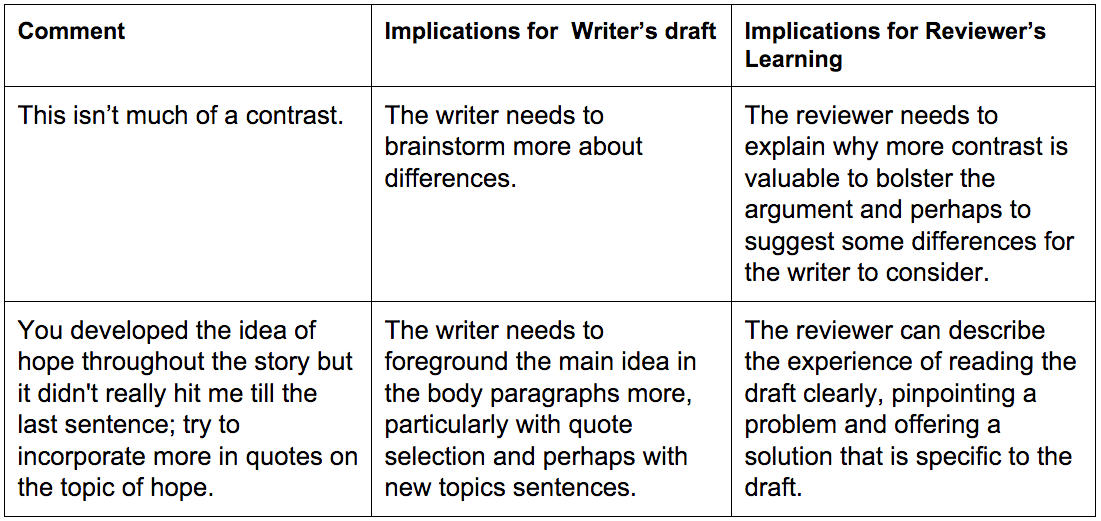

In retrospect, I didn’t teach commenting often enough or directly enough to change the way students read and talked about writing. I read reviewers’ comments to tell me about writers’ needs. I didn’t read reviewers’ comments to tell me about their learning, about the ways they could (or could not) articulate how well a draft met criteria and what revision strategies were needed. The table below shows those two ways of reading comments.

Primed to be an Evidence-Based Teacher of Feedback

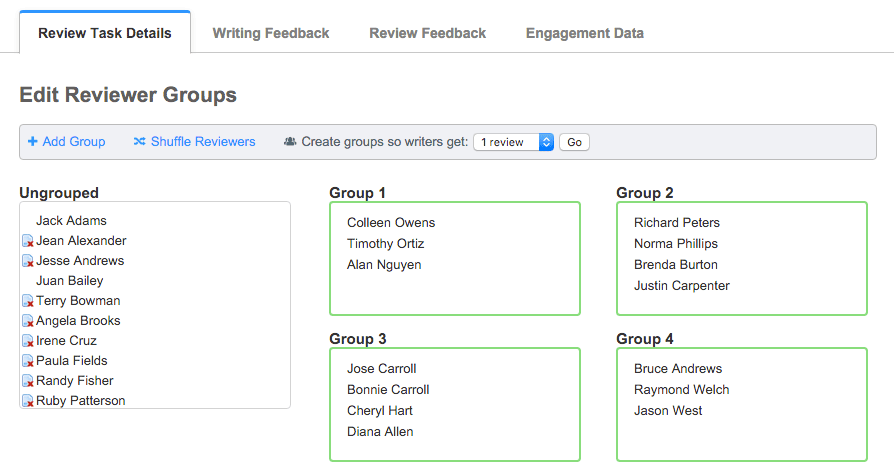

This semester, I again have the same issues with delinquent writers, but I’m trying to invest equal time in helping prepared writers become better reviewers. During class, I’m doing more of the activities for teaching commenting that I learned from other Eli Review instructors.

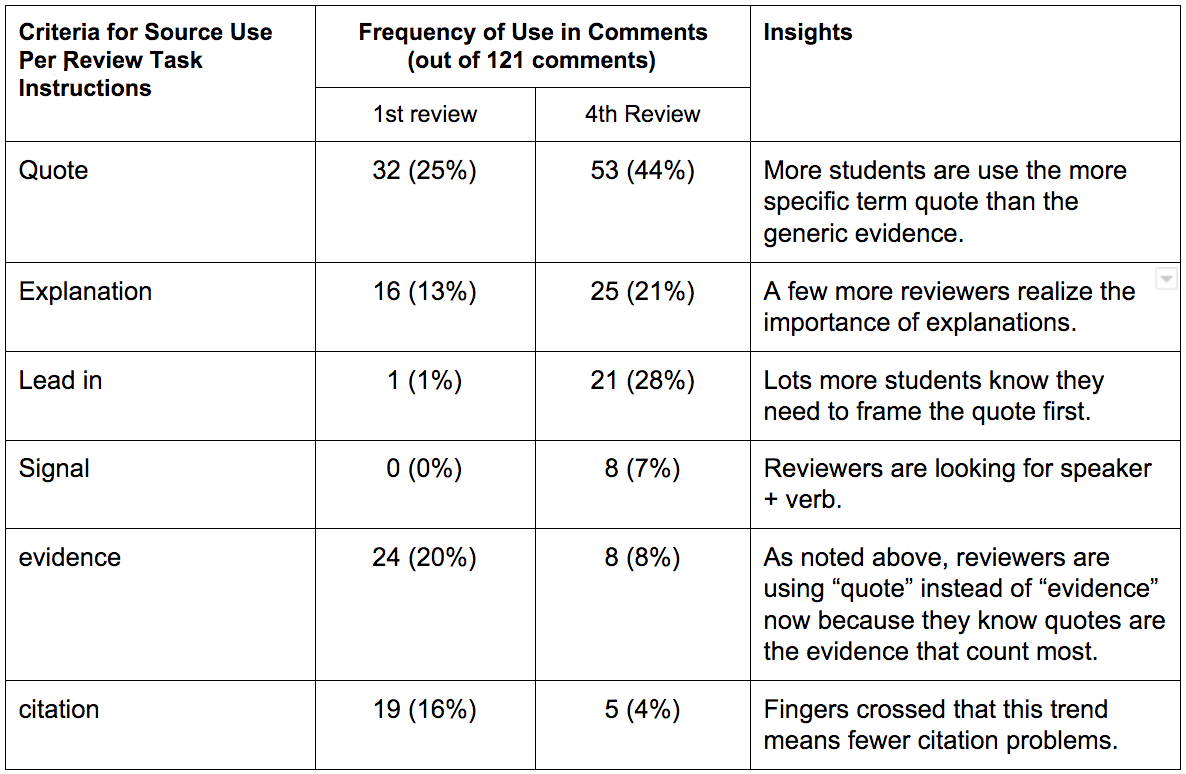

I’m also simplifying review to focus on one skill (plus the minimum requirements for completion). Although I didn’t find an effect size difference in any comparison of final projects, I noticed that use of evidence made a letter grade difference in final drafts. For the first month of this term, I assigned the exact same review about integrating sources as students wrote about three different stories. The narrow focus has had a measurable impact. The chart below shows what problems reviewers saw in the drafts they read across three weeks of peer learning work in Eli Review; this table summarizes the trait id checklists across three tasks.

Wait, most of those numbers go up! How can more problems in drafts be good? Reviewers are getting more accurate, and that’s great! They’re still probably underreporting problems with integrating sources, but every one they catch improves the draft. And every time they see problems in someone else’s draft, the writing coach in their heads gets smarter.

Also, because we’ve hammered lead in, signal, quote with careful punctuation, in-text citation, explanation for a month, they have more confidence in talking to each other about these criteria. Their comments are longer and more helpful. After analyzing the comment digest from the most recent review, I can tell that all but five reviewers (prepared writers) averaged 4+ comments of 25+ words each. They’re also using the language of effective source use in their comments—as often to praise writers as to make suggestions.

This evidence shows that reviewers are looking for the parts of the draft that will be most helpful to writers as they revise. It shows that more of them can elaborate in their comments to writers because they’ve learned what’s possible to say and how to say it. Or, as Bill Hart-Davidson likes to say, they have an expanded meta-discourse for writing. It shows that the majority of reviewers are engaged in learning.

By looking at feedback, I’m better able to pay attention to prepared writers so that I can help them engage in helpful commenting and become better reviewers; through that practice, they’ll become better writers.

Now, the data also shows that 10 delinquent writers aren’t even minimally engaged in writing, so they are missing out on review too. Let me email them one more time.