This is the second in the Investigating Your Own Course series.

We launched our Investigating Your Own Course series last week to demonstrate how Eli Review’s analytics show us, in a summative way at the end of a course, the secret sauce to peer learning: the effort students put in and the choices they make across time.

In real time, Eli Review provides formative feedback and engagement analytics that surface what is happening during a single review. In aggregate, however, this data helps identify overall trends and patterns.

If you’ve been following the Twitter hashtag #seelearning, you’ve noticed that we’ve been looking in detail at Eli Review’s Course Analytics (“Engagement Highlights” and “Trend Graphs”) in an attempt to answer two important questions with more nuance:

- To what extent was this course a feedback-rich environment?

  - How much review and revision activity were students assigned?

  - How much effort did they put into review?

  - Did all students contribute equally?

- To what extent was this feedback-rich environment helpful?

  - How many comments received instructor endorsement?

  - How helpful on average did writers find the feedback they received?

In this post, we’re collecting the bite-size bits of evidence tweeted last week that were taken from Bill Hart-Davidson’s Technical Writing course. We’ll look closely here at that evidence for answers to the first question, and we’ll follow up next week with evidence for the second one.

To follow the insights here using data from your own course, find your Highlights report by following these steps:

- Click on the name of a current or previous course from your Course Dashboard.

- From the course homepage, click the “Analytics” link. “Engagement Highlights” are shown first.

Looking for Evidence of a Feedback-Rich Environment

Feedback doesn’t just happen. Instructors have to design for it. Even then, feedback doesn’t just happen. Students have to commit to offering helpful feedback.

Thus, when we look for evidence of a feedback-rich environment, we are looking at instructional design as well as student participation. The Highlights report shows both.

For example, this Highlights report from Bill Hart-Davidson’s Technical Writing class gives an overview of the write-review-revise cycles he designed. (His review tasks are published and free to re-use in our Curriculum Resources.)

How much review and revision activity were students assigned?

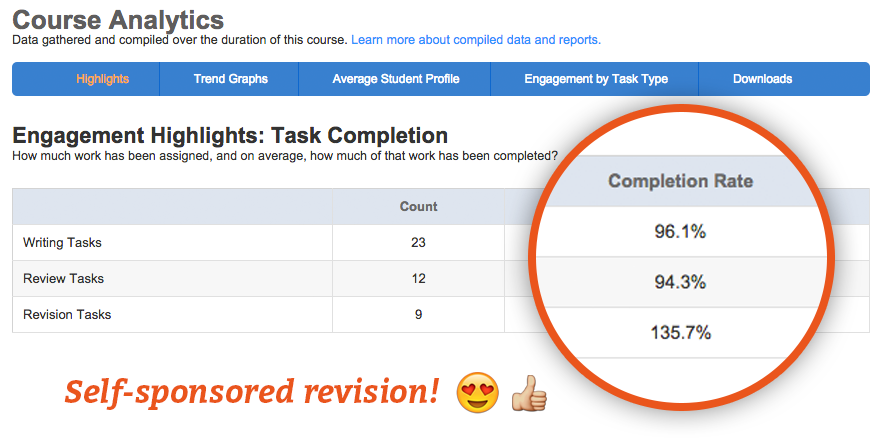

According to data from the “Task Completion” section, Bill assigned 23 writing/revised writing tasks, 12 reviews, and 9 revision plans. For most assignments, then, his students were getting feedback, making a plan about how to use that feedback, and then revising accordingly. Bill clearly values feedback and revision, and his assignment counts show it. There’s nothing magic here except that he (and his students and you) can see the net effects of his assignment design at a glance.

How much effort did they put into review?

The magic really is Bill’s students. The completion rates clearly show that they put in effort. More than 94% of them did all assigned tasks. Impressively, the 134% completion rate for revision plans indicates that some students saw the value in selecting-prioritizing-reflecting and created revision plans even when they weren’t required to. Wouldn’t you love to know that about your class?
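To see how a completion rate can climb above 100%, here is a minimal sketch of the arithmetic. It assumes the rate is simply completed tasks divided by expected tasks (assigned tasks times students); we aren't claiming this is how Eli Review computes it. The class size of 20 and the count of 241 completed plans are hypothetical numbers chosen for illustration, not figures from Bill’s report; only the 9 assigned revision plans come from the report.

```python
def completion_rate(completed: int, assigned_tasks: int, students: int) -> float:
    """Return completed work as a percentage of expected work.

    Assumption: expected work = assigned tasks x number of students.
    """
    expected = assigned_tasks * students
    if expected == 0:
        raise ValueError("no tasks were expected, so the rate is undefined")
    return 100 * completed / expected


# Hypothetical inputs: 20 students, 9 assigned revision plans,
# and 241 plans actually submitted (some students did extra ones).
rate = completion_rate(completed=241, assigned_tasks=9, students=20)
print(f"{rate:.0f}%")
```

Any rate over 100% under this assumption means students submitted more plans than the assignments required.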

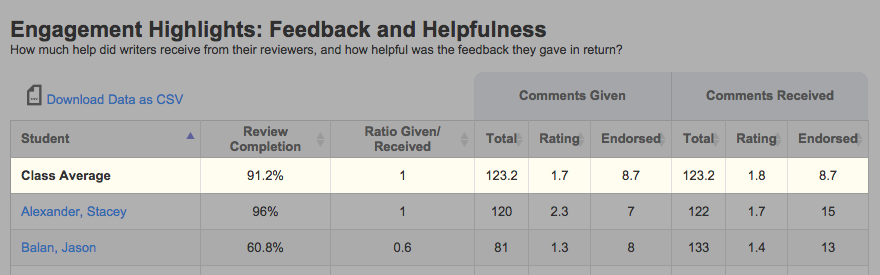

When we look at the “Feedback and Helpfulness” section of this report (note that all names are pseudonyms, but the data from Bill’s class is real) and focus in particular on the “Class Average” row, we can see that students gave on average 123 comments during the term, which is a strong level of participation in 13 reviews.

The completion rates and the quantity of comments suggest that students were highly engaged with assigned tasks.

Did all students contribute equally?

These averages let us get a sense of how engaged the whole class was, but we also need to consider individual differences. The “Feedback and Helpfulness” section allows us to compare students’ engagement during review.

When we sort by total comments given (over the duration of the term), we see that the least engaged student offered 78 comments while the most engaged student offered almost double that at 154 comments: That’s a big gap!

NOTE: Bill can explore this gap using Eli Review’s student-level analytic reports; for now, we’ll concentrate just on evaluating the extent to which this course provided a feedback-rich environment.

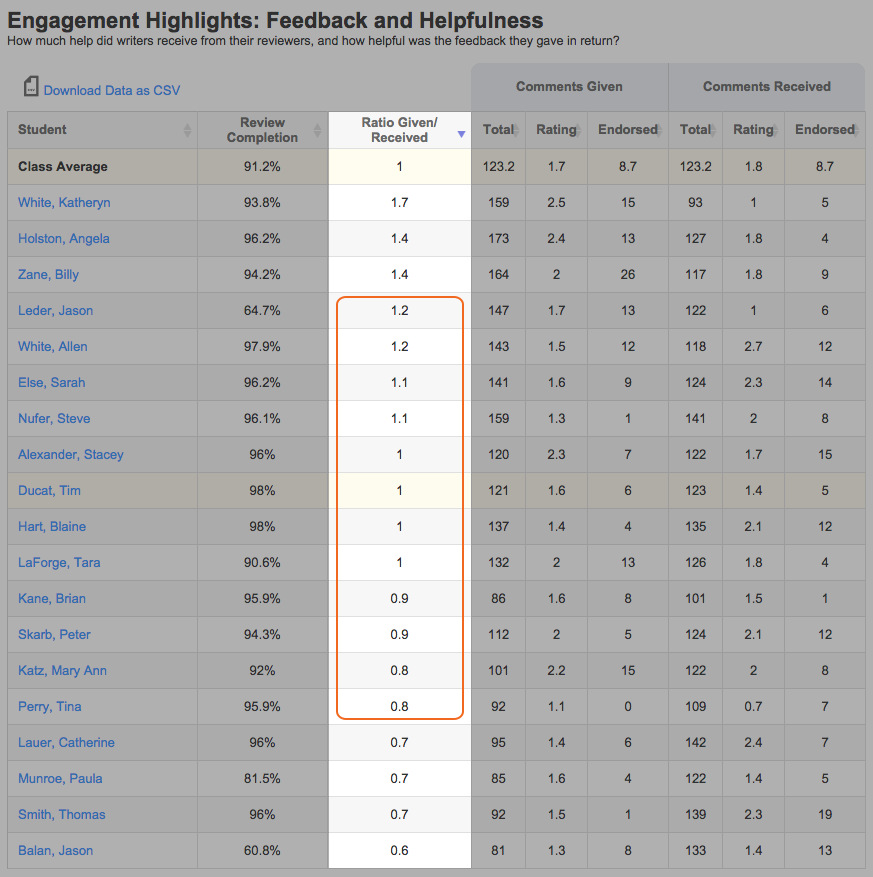

It’s clear that there were major differences in the quantity of comments students gave. Did students who offered few comments also receive few comments? Or, to borrow Oakley’s metaphor, did group members absorb the behaviors of couch potatoes and hitchhikers or mirror them back? The “Ratio of Comments Given/Received” column gives some indication that most students in the class gave as many comments as they got.

By sorting the engagement table using the ratio column and then bracketing those students who were under 0.8 or over 1.2 (±0.2 from perfectly reciprocal), we can see that four students likely under-contributed feedback and three students likely over-contributed. Both patterns are damaging to students’ own learning and to the group dynamic. Instructors can use these analytics to talk to students about effective group work.
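If you export or copy the engagement table, the bracketing step can be sketched in a few lines. This is only an illustration: the student labels and comment counts below are hypothetical, and the data structure is our own assumption rather than an Eli Review export format. The 0.8 and 1.2 cutoffs are the ones described above.

```python
def bracket_by_ratio(comments, low=0.8, high=1.2):
    """Group students by their given/received comment ratio.

    comments maps a student label to a (given, received) pair.
    Ratios below `low` count as under-contributing, above `high`
    as over-contributing, and everything else as balanced.
    """
    groups = {"under": [], "balanced": [], "over": []}
    for name, (given, received) in comments.items():
        if received == 0:
            continue  # ratio is undefined; review this student by hand
        ratio = given / received
        if ratio < low:
            groups["under"].append(name)
        elif ratio > high:
            groups["over"].append(name)
        else:
            groups["balanced"].append(name)
    return groups


# Hypothetical counts for illustration only.
sample = {
    "Student A": (78, 120),   # ratio 0.65
    "Student B": (154, 110),  # ratio 1.40
    "Student C": (123, 125),  # ratio about 0.98
}
groups = bracket_by_ratio(sample)
print(groups)
```

Students with no received comments are skipped rather than forced into a bracket, since a ratio can't be computed for them.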

Though we can detect some differences in students’ contributions, there are no outliers–or at least none of Oakley’s hitchhikers.

NOTE: Quantity is never the whole story. Bill can explore the quality of the feedback students gave through the comment digest or other analytics.

So, to what extent was Bill’s course a feedback-rich environment?

Using three metrics from the Highlights report in Bill’s class (task count, completion rate, and ratio of comments given/received), we’re able to see strong evidence of a feedback-rich environment:

- The data indicates that Bill designed his course as a feedback-rich environment.

  - Students completed reviews for most writing tasks.

  - Students used feedback to make revision plans for most writing tasks.

  - Students revised many writing tasks.

- The data indicates that students fully participated.

  - More than 94% of students completed all assigned tasks.

  - On average, students offered 123 comments during the term.

  - The majority of students gave as many comments as they received.

These insights provide evidence that Bill designed and facilitated a feedback-rich environment. They also show that students “bought into” peer review and put significant effort into the comments they gave each other.

Tuesday, through other analytics, we’ll explore whether that effort resulted in helpful feedback.

We hope you’ll follow along with us and investigate your own classes too. Please share your findings on Facebook or Twitter with the hashtag #seelearning!

Interested in learning more?

- Want to learn more about interpreting your course data? See the Course Analytics user guide or read more about using formative feedback as evidence of learning.

- New to Eli Review? Here’s a breakdown of how to get started.

- Want to talk to a human about how to interpret your course data or how to get started? Contact Melissa Meeks, director of professional development.